AI in Healthcare: Perception and Trust Research

Project Overview

Overview & Role

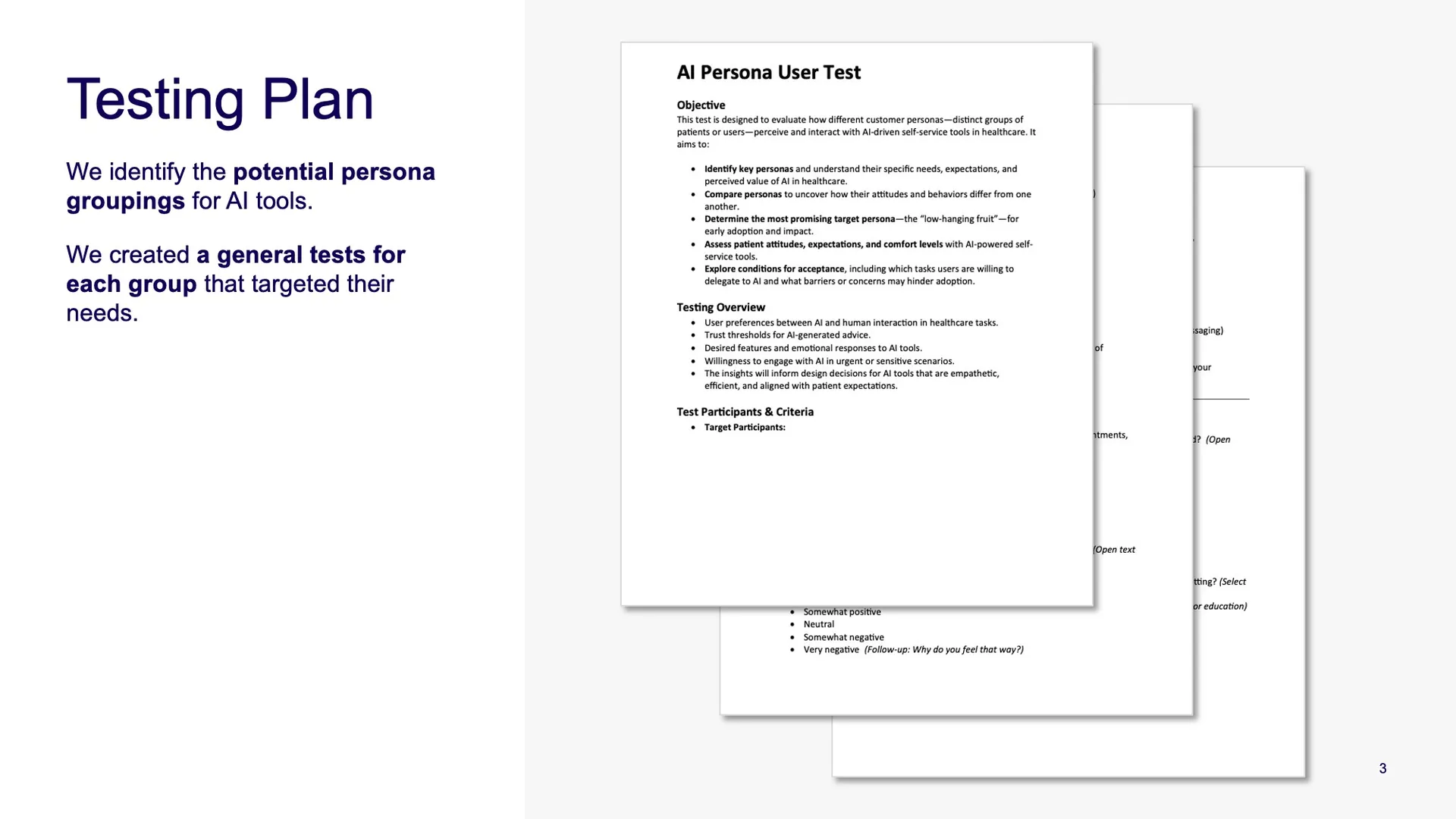

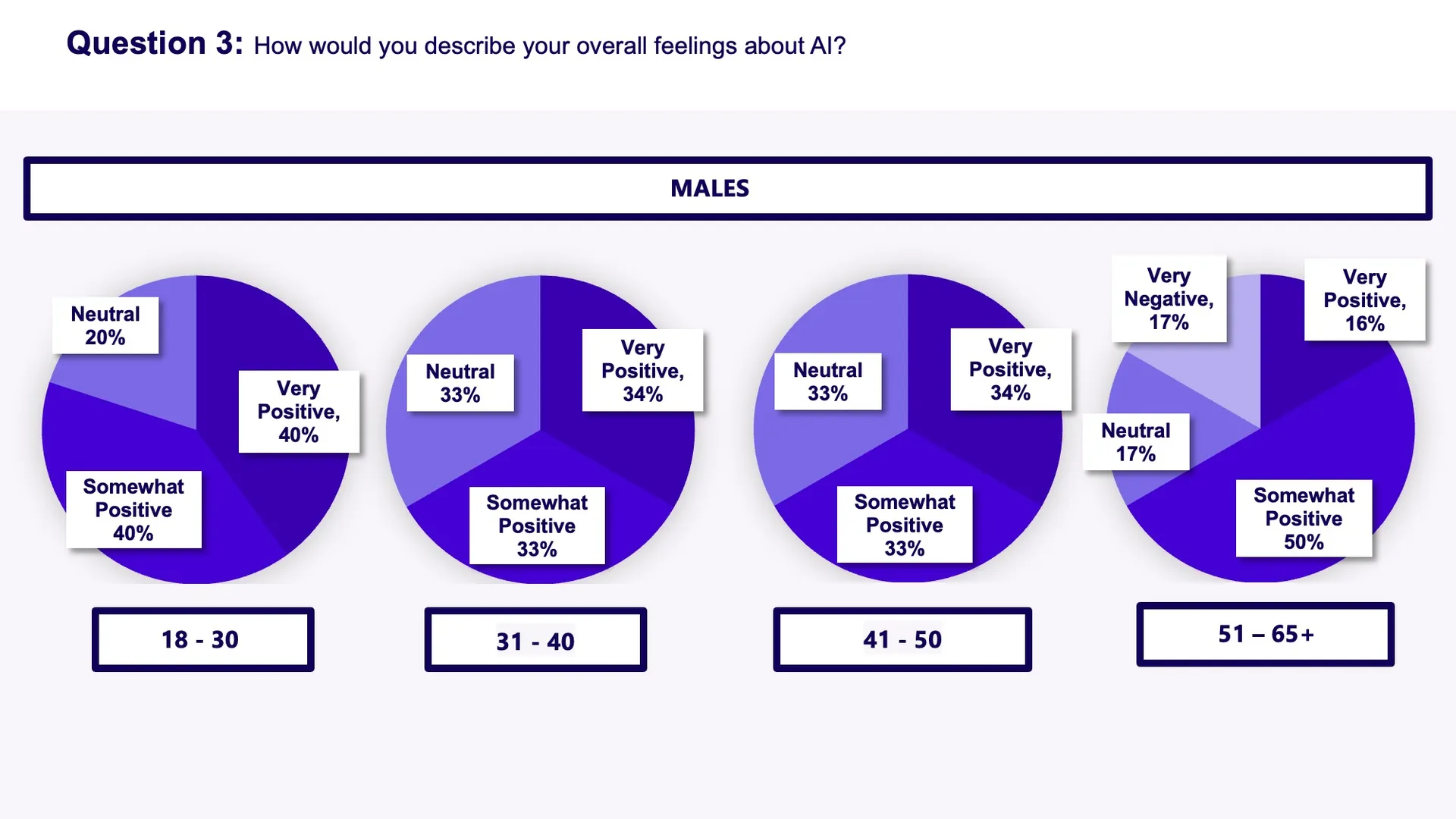

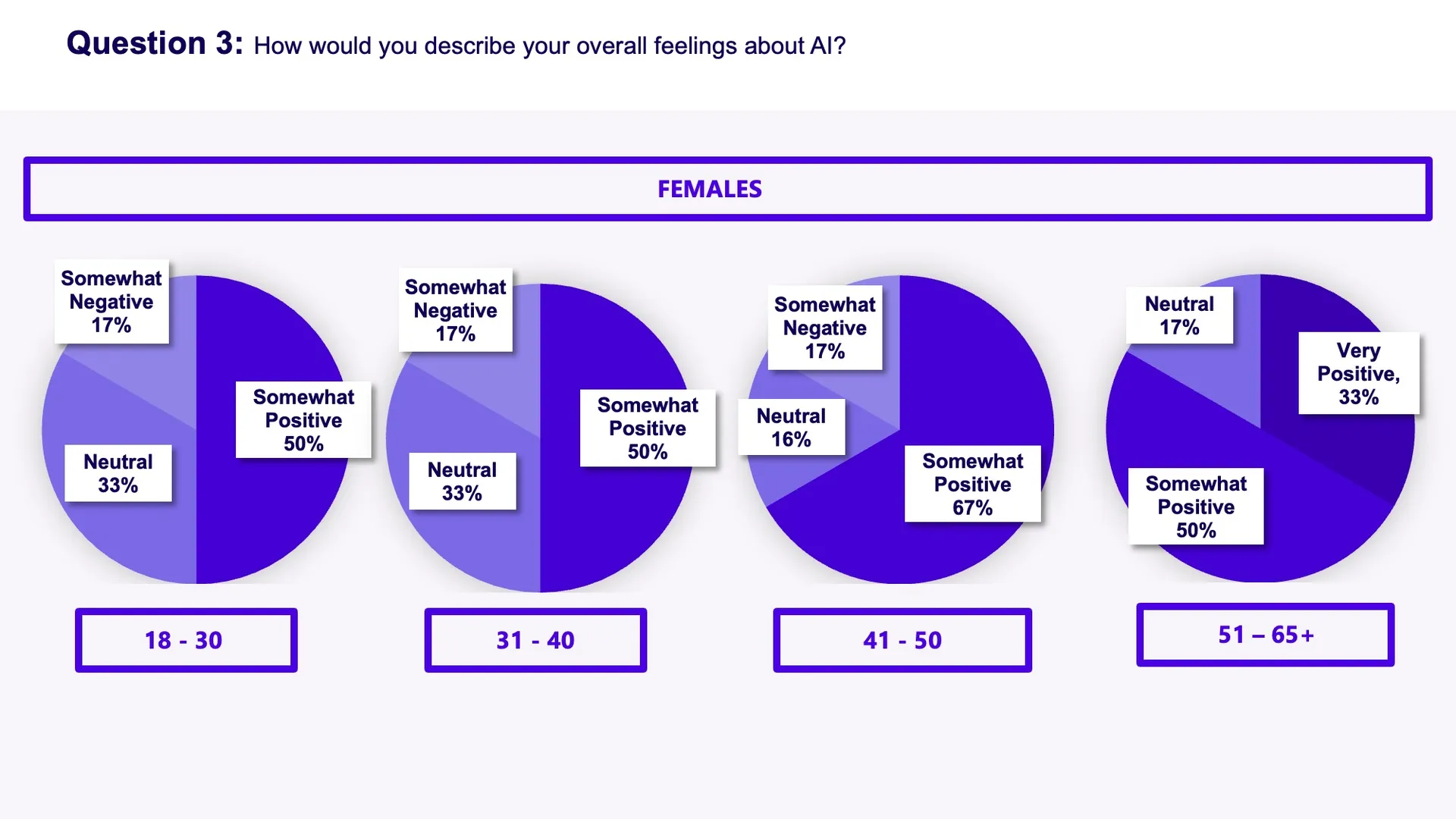

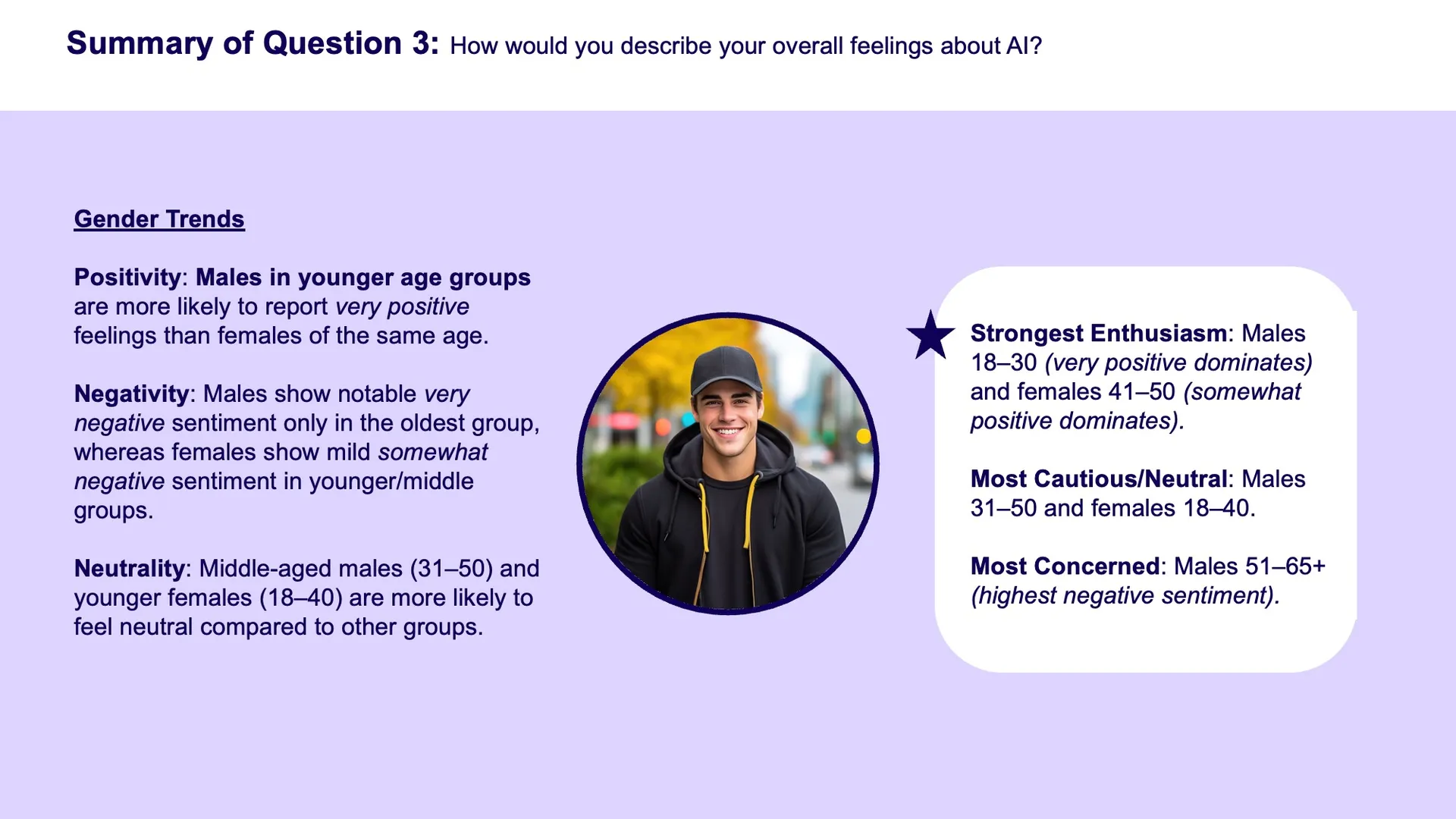

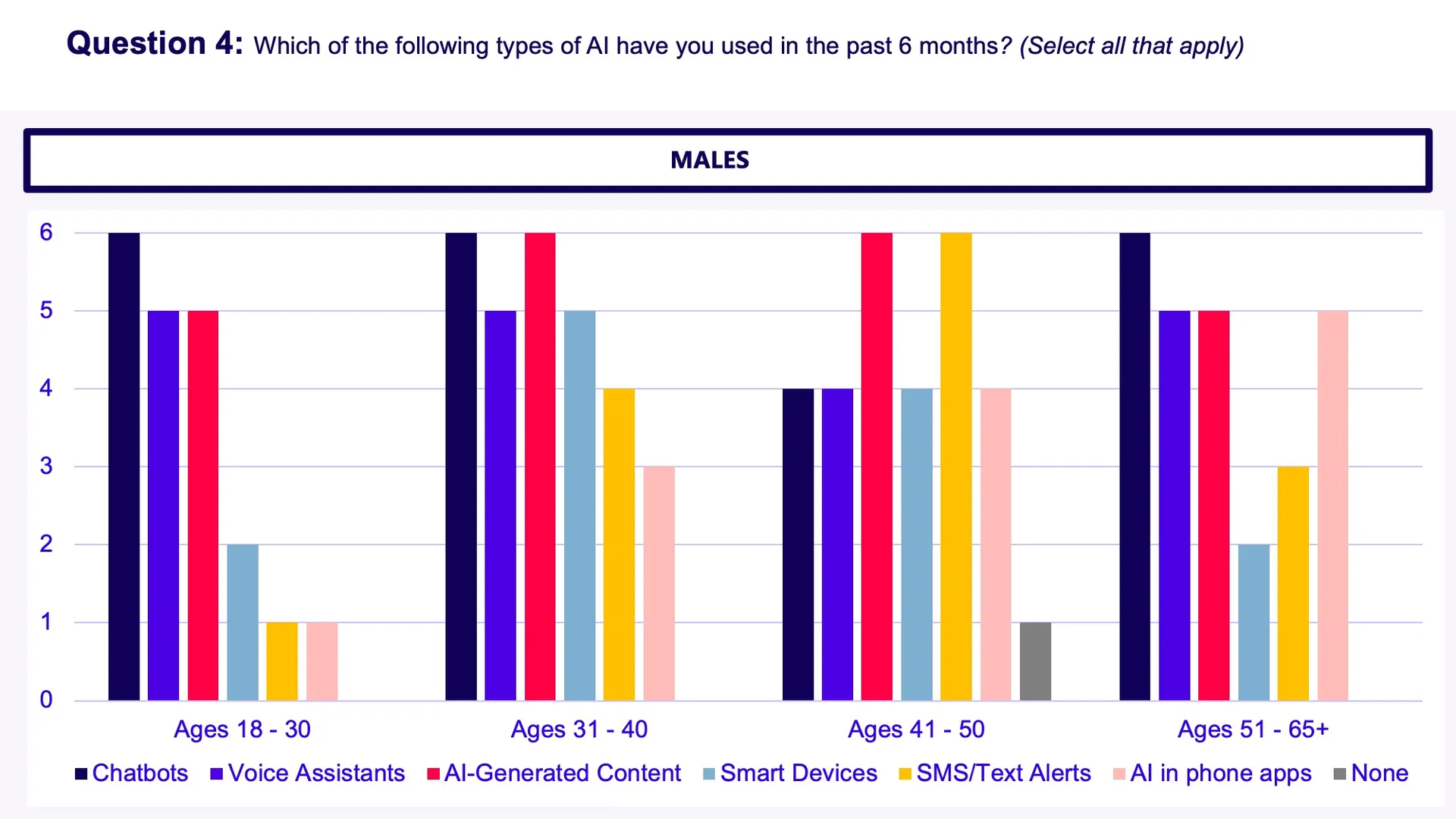

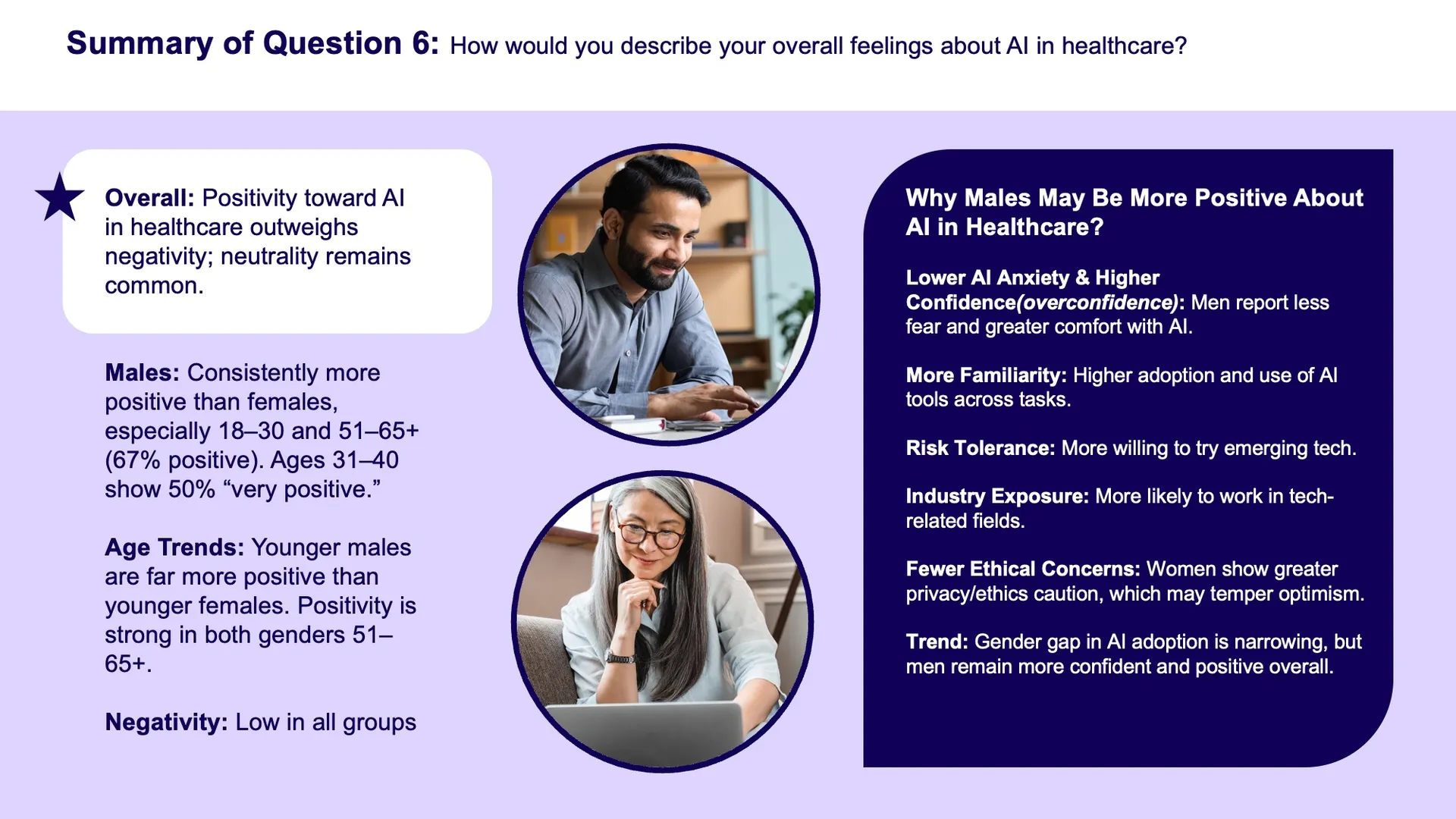

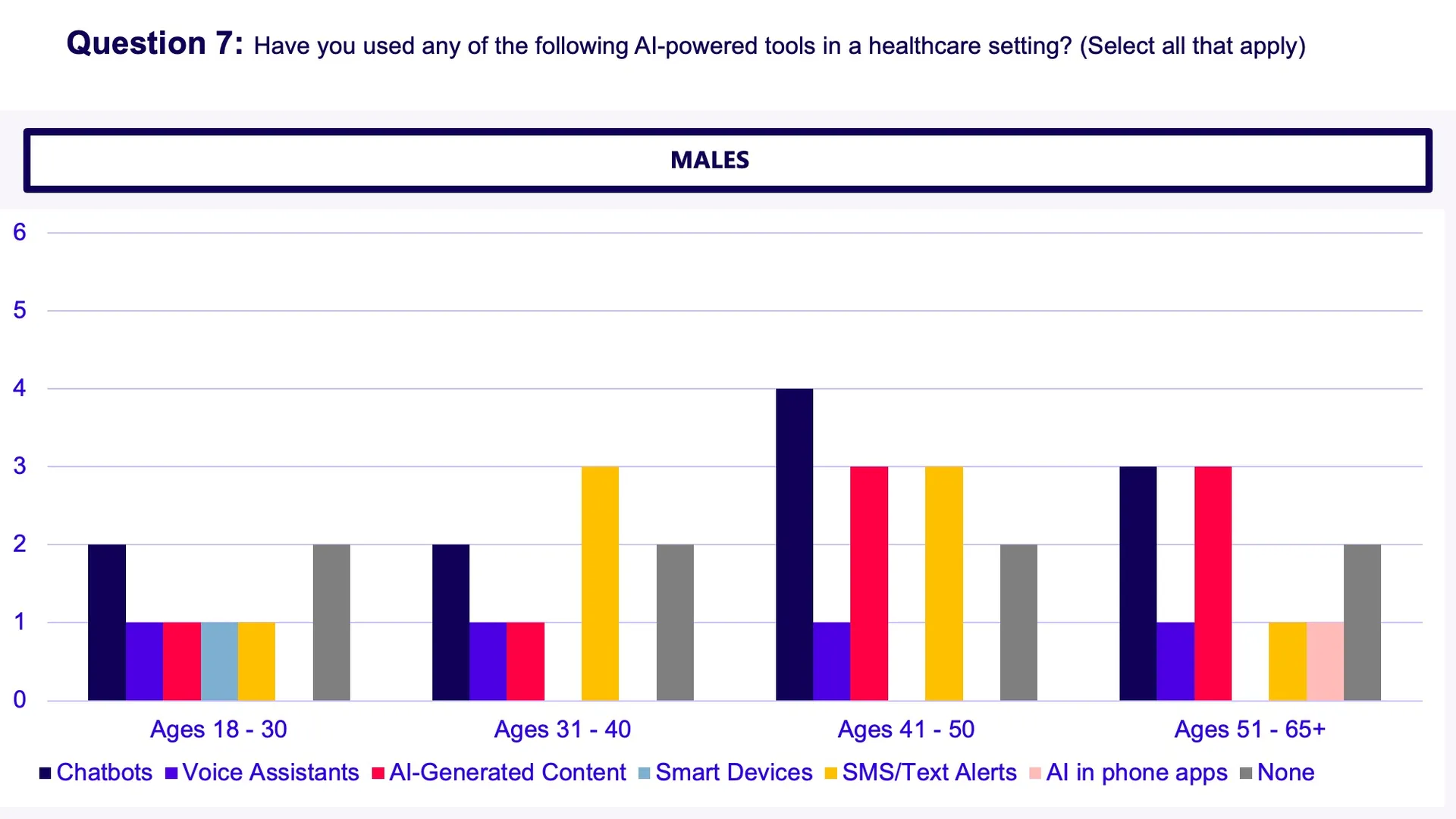

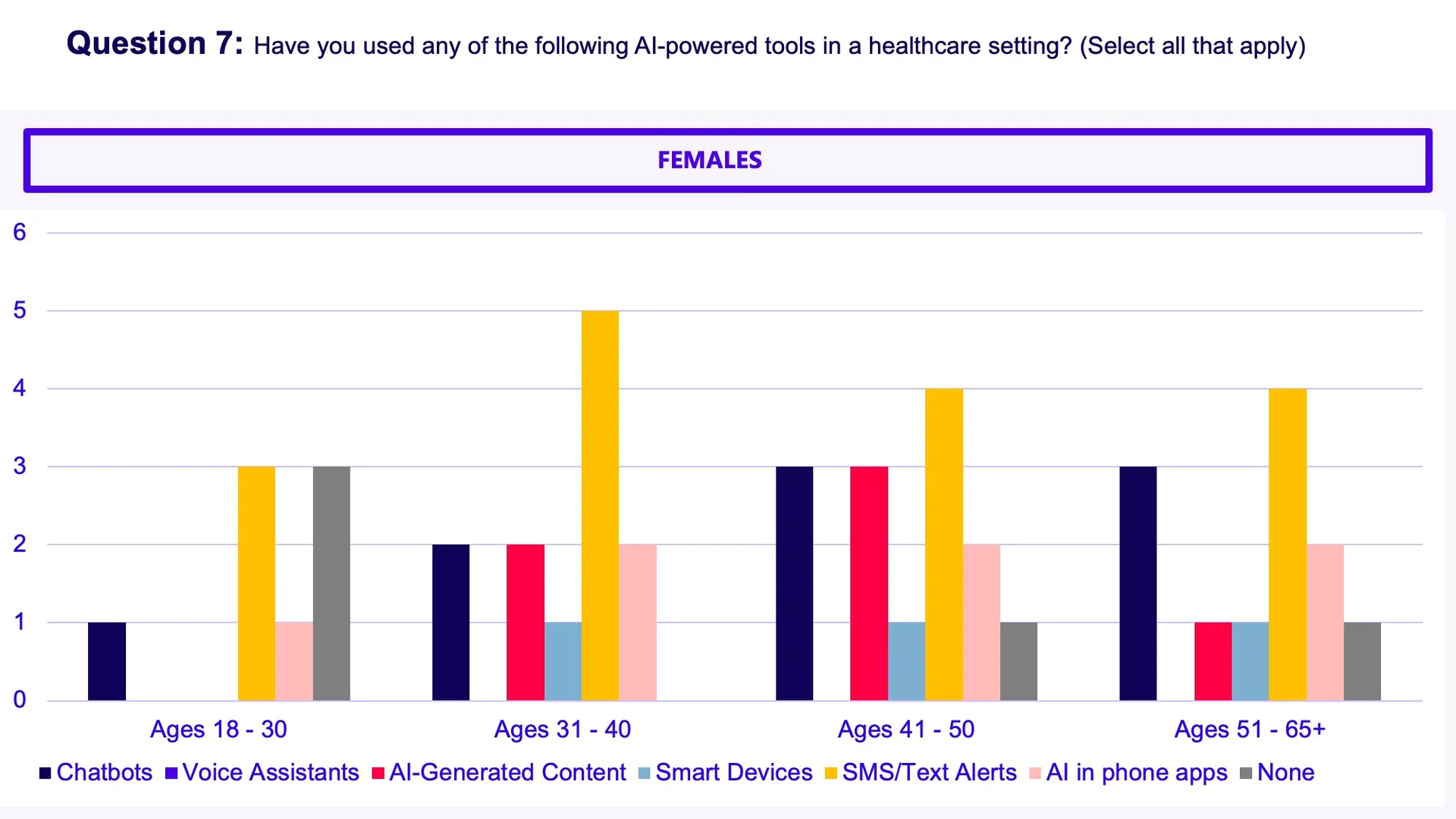

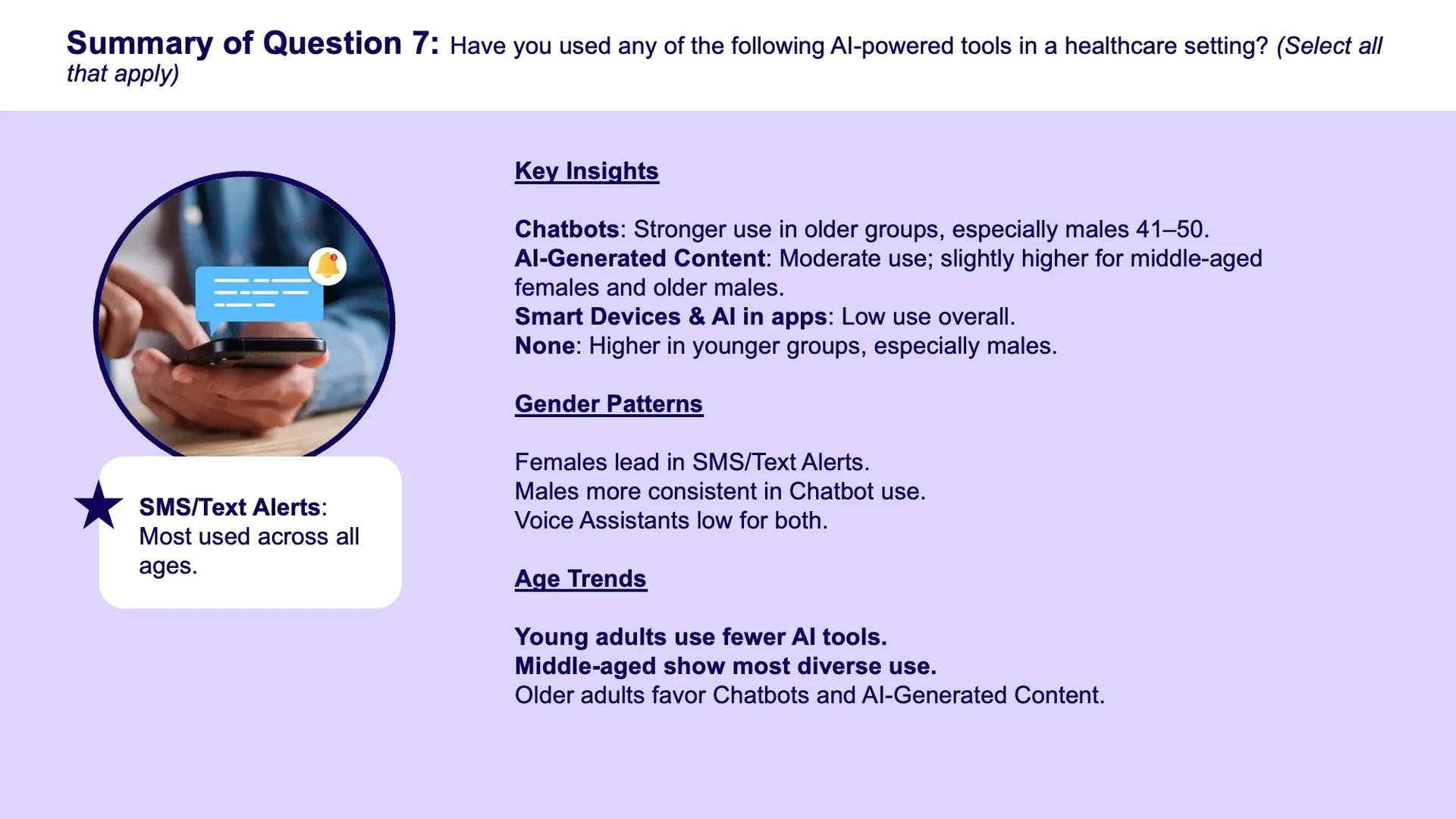

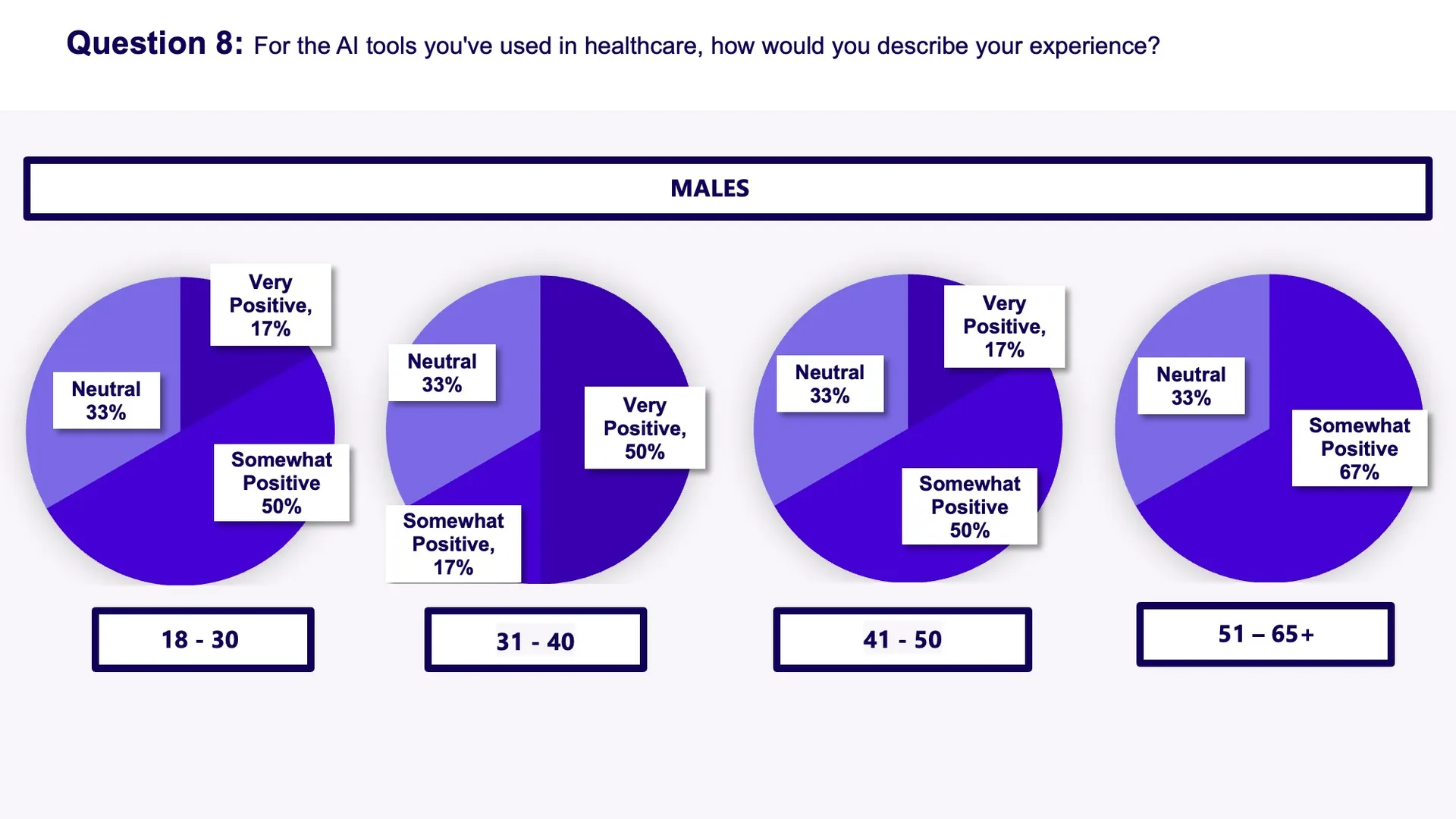

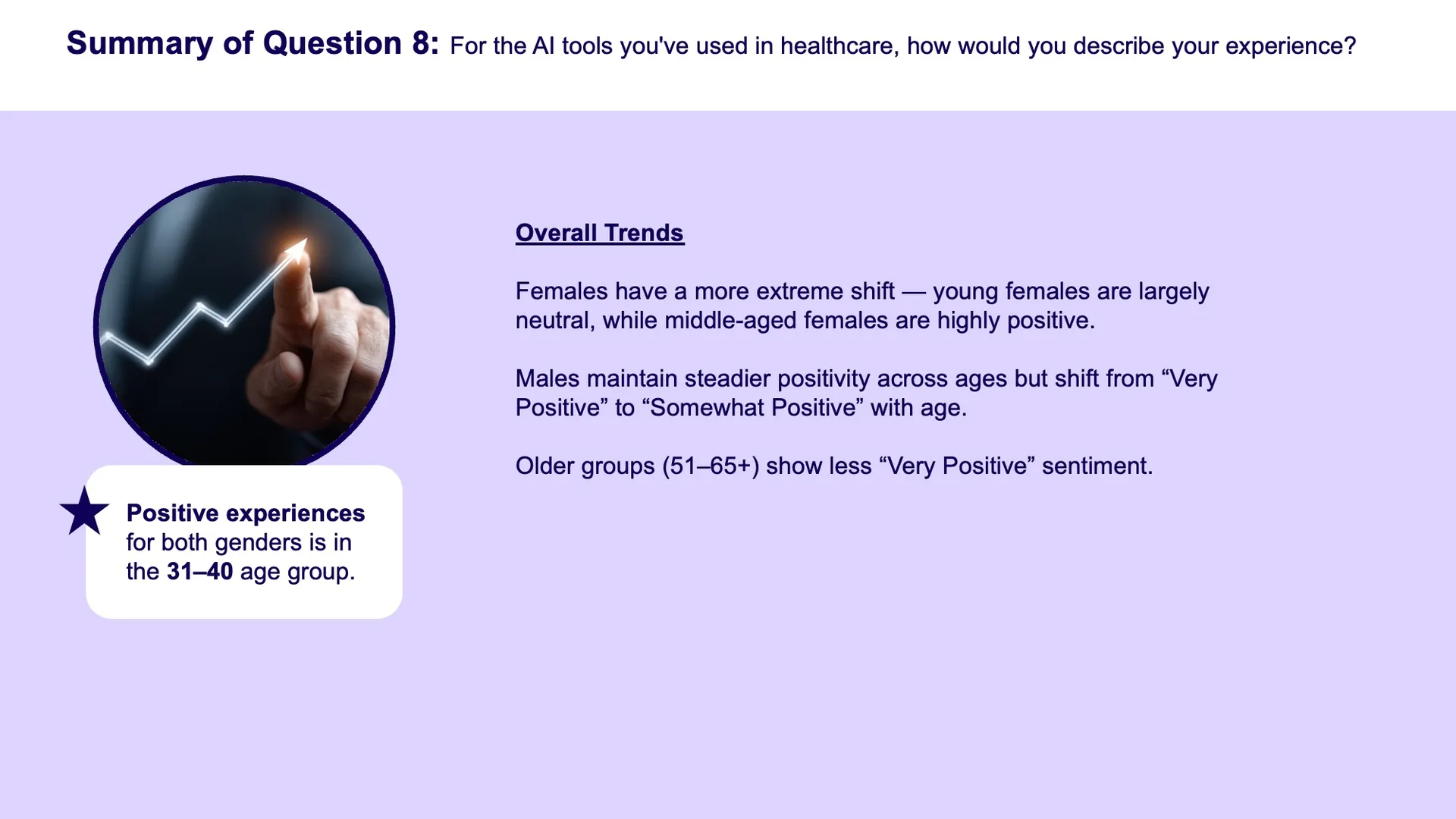

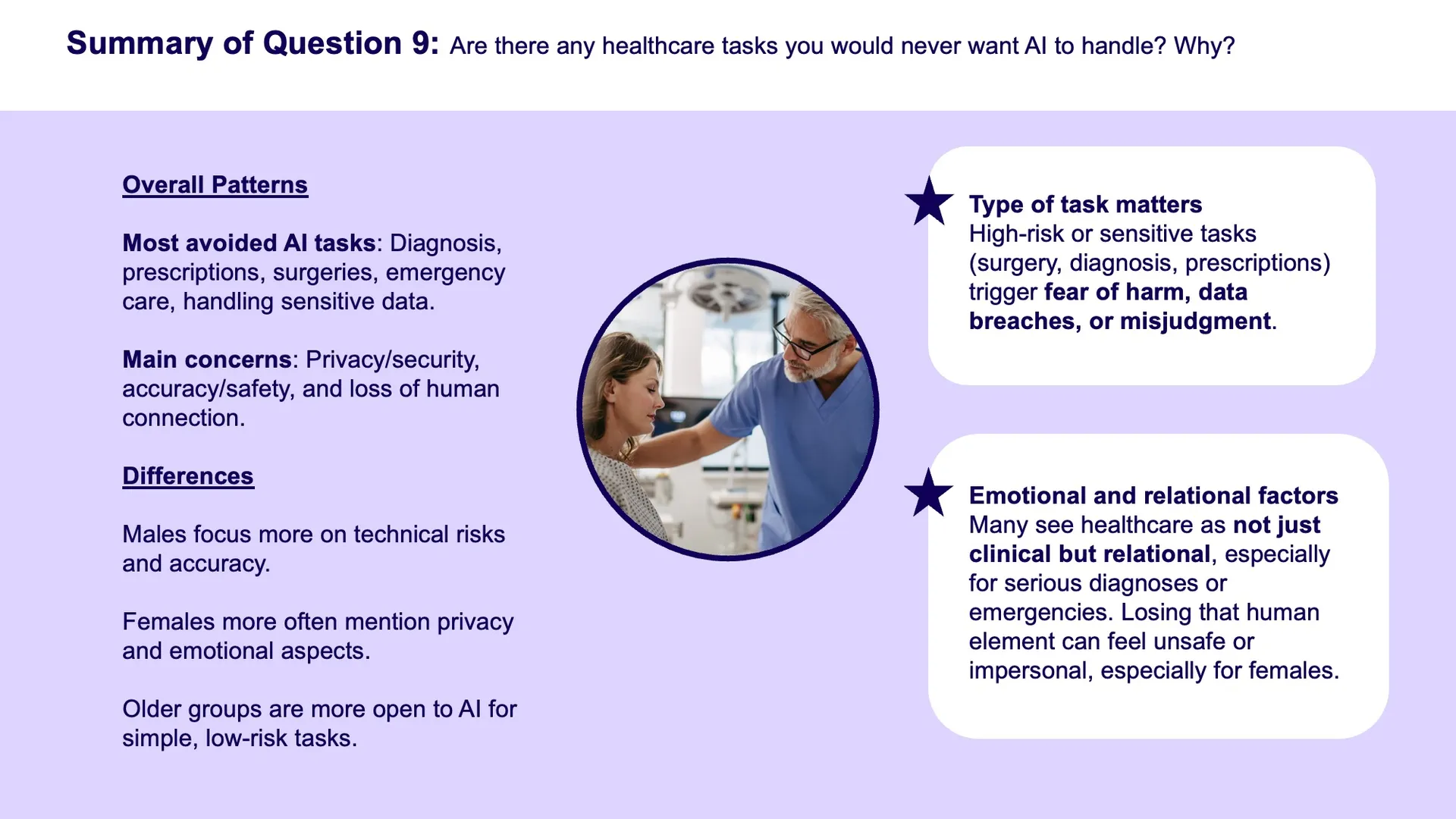

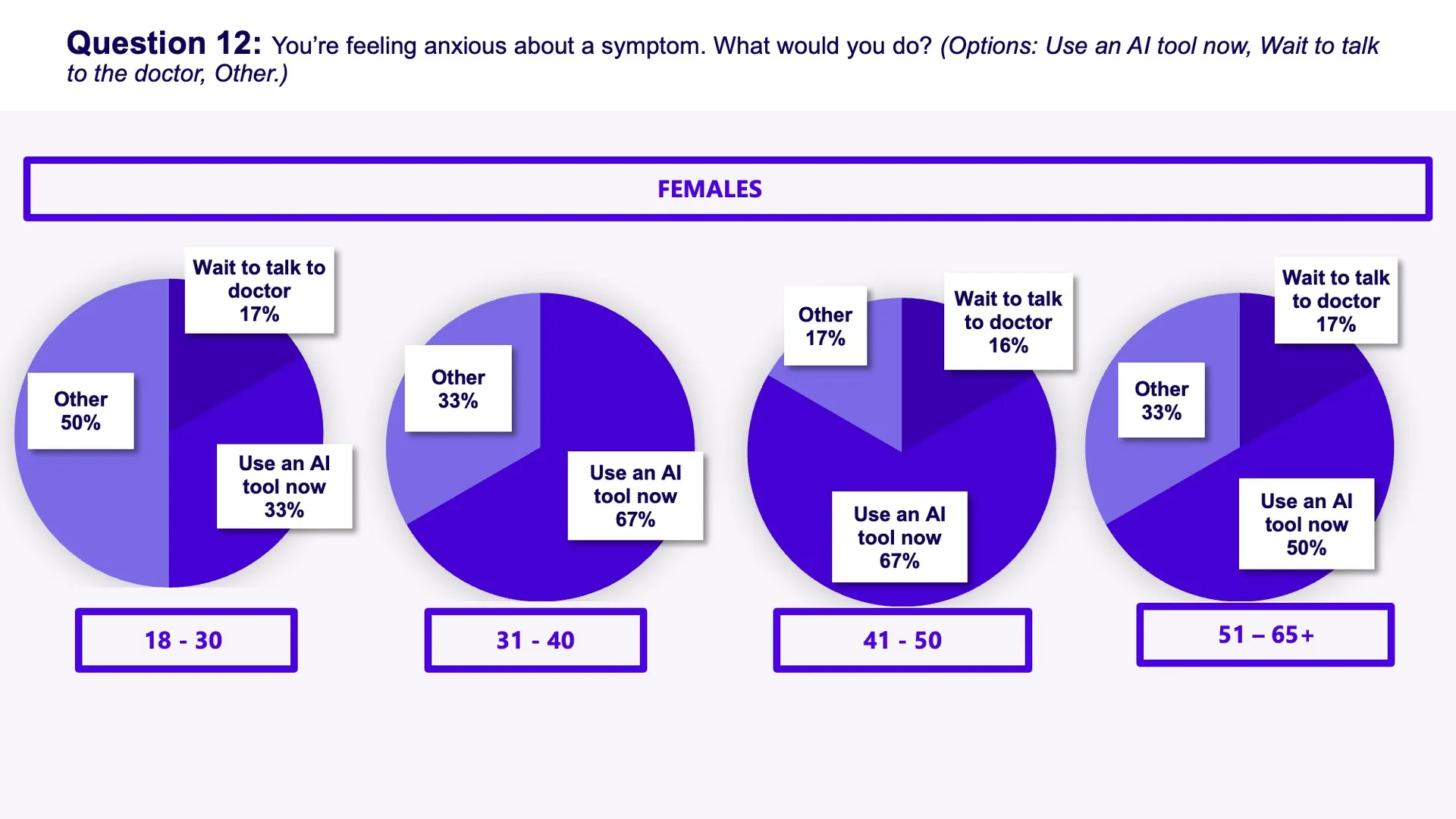

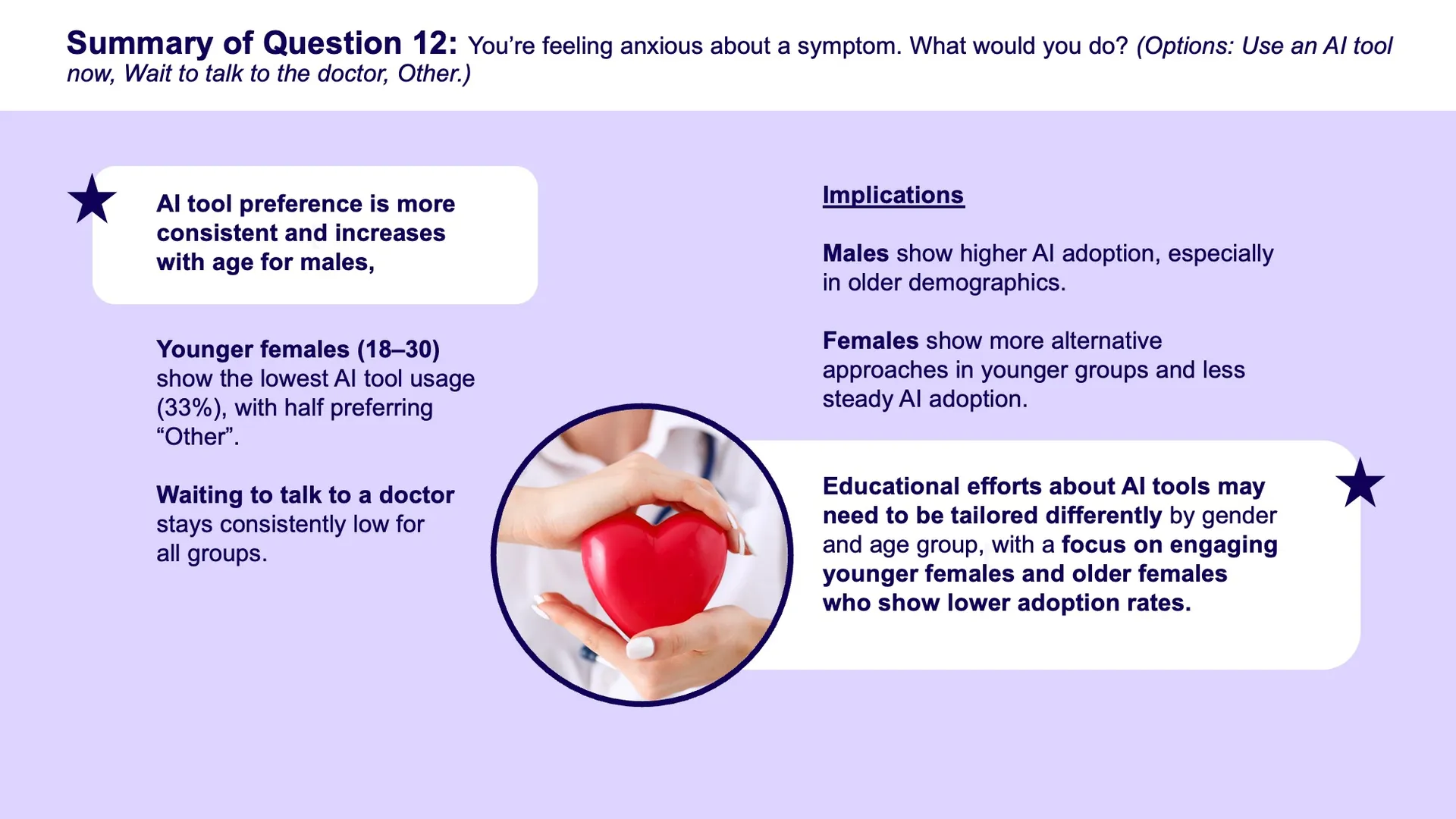

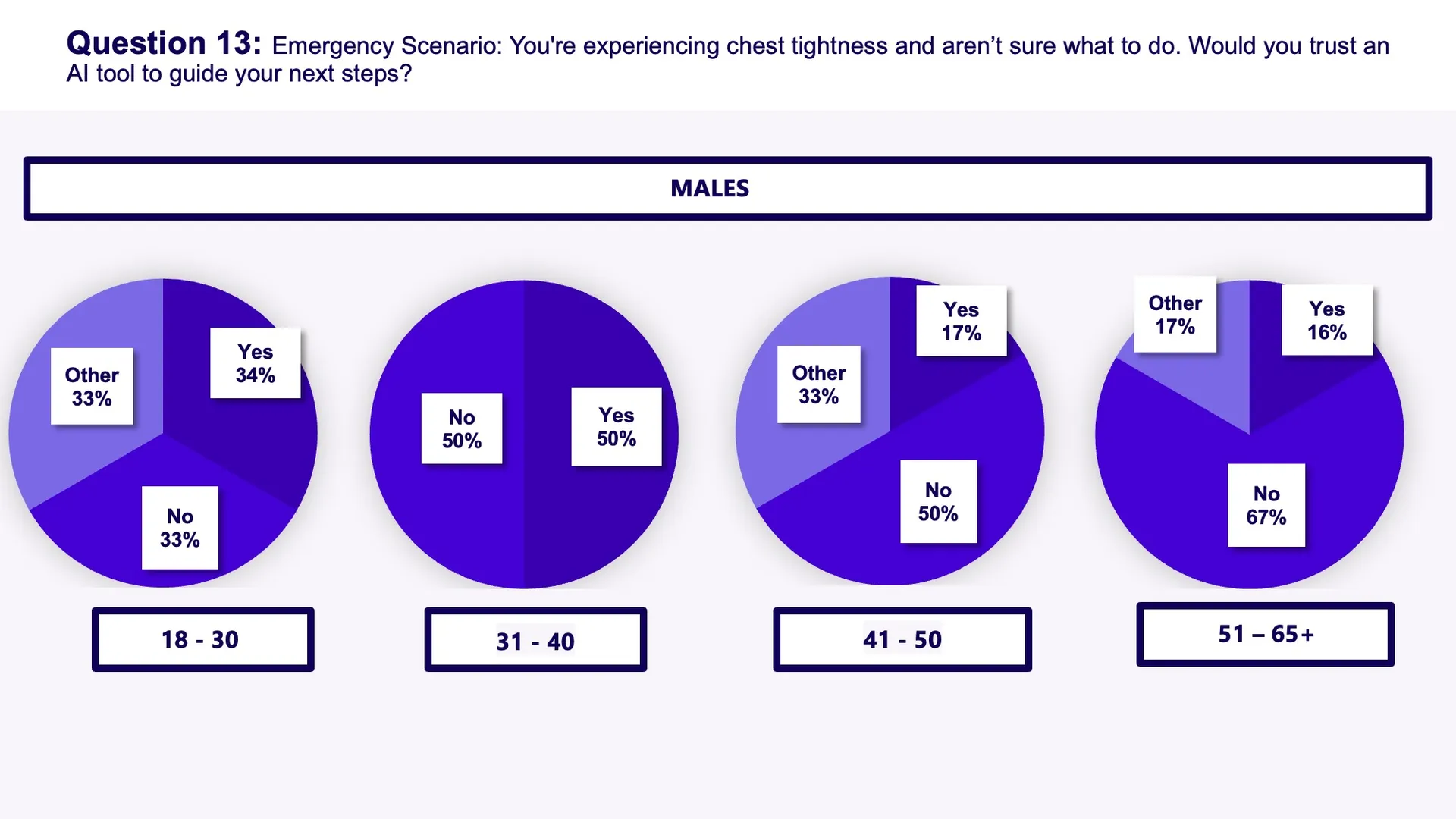

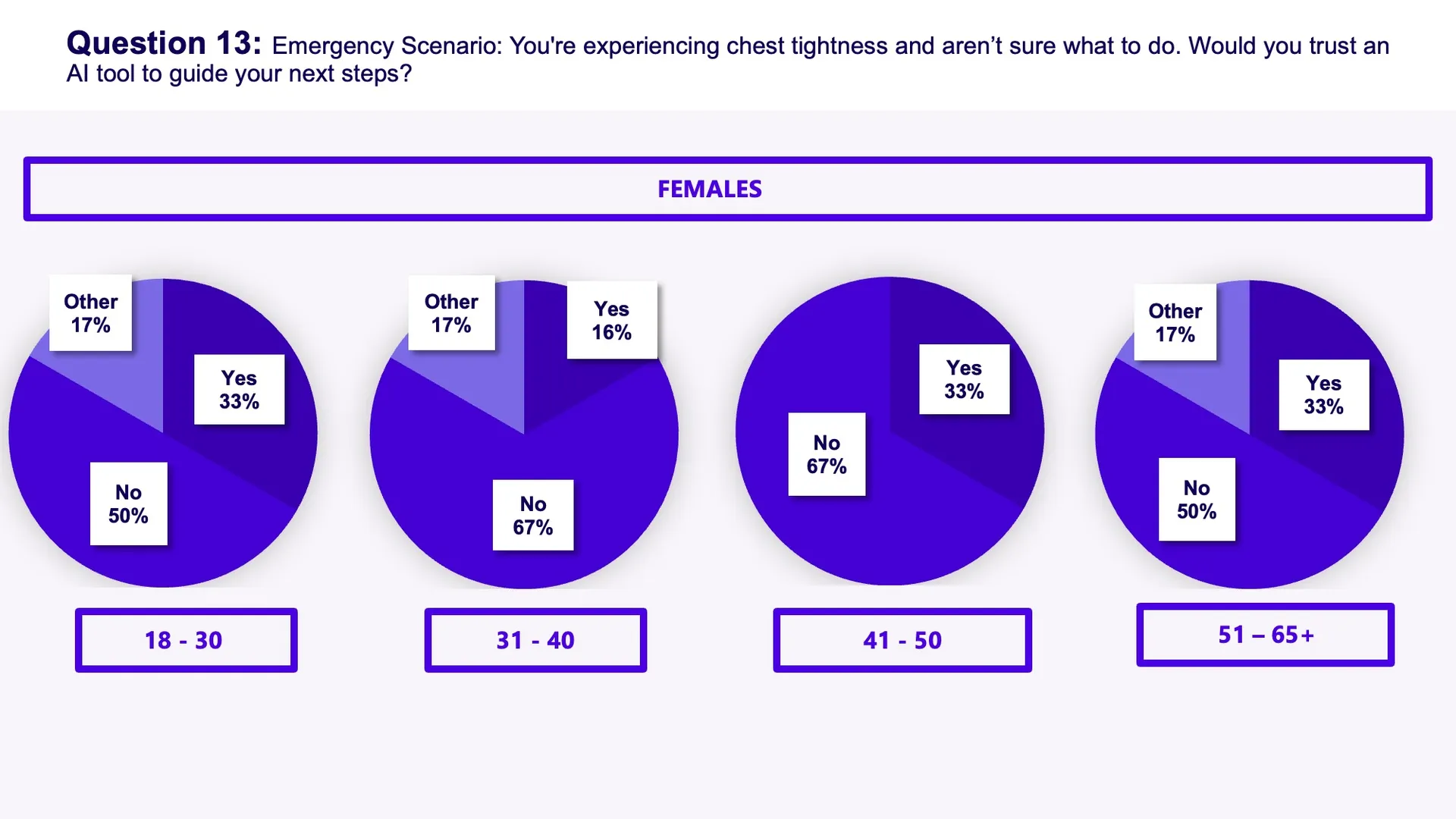

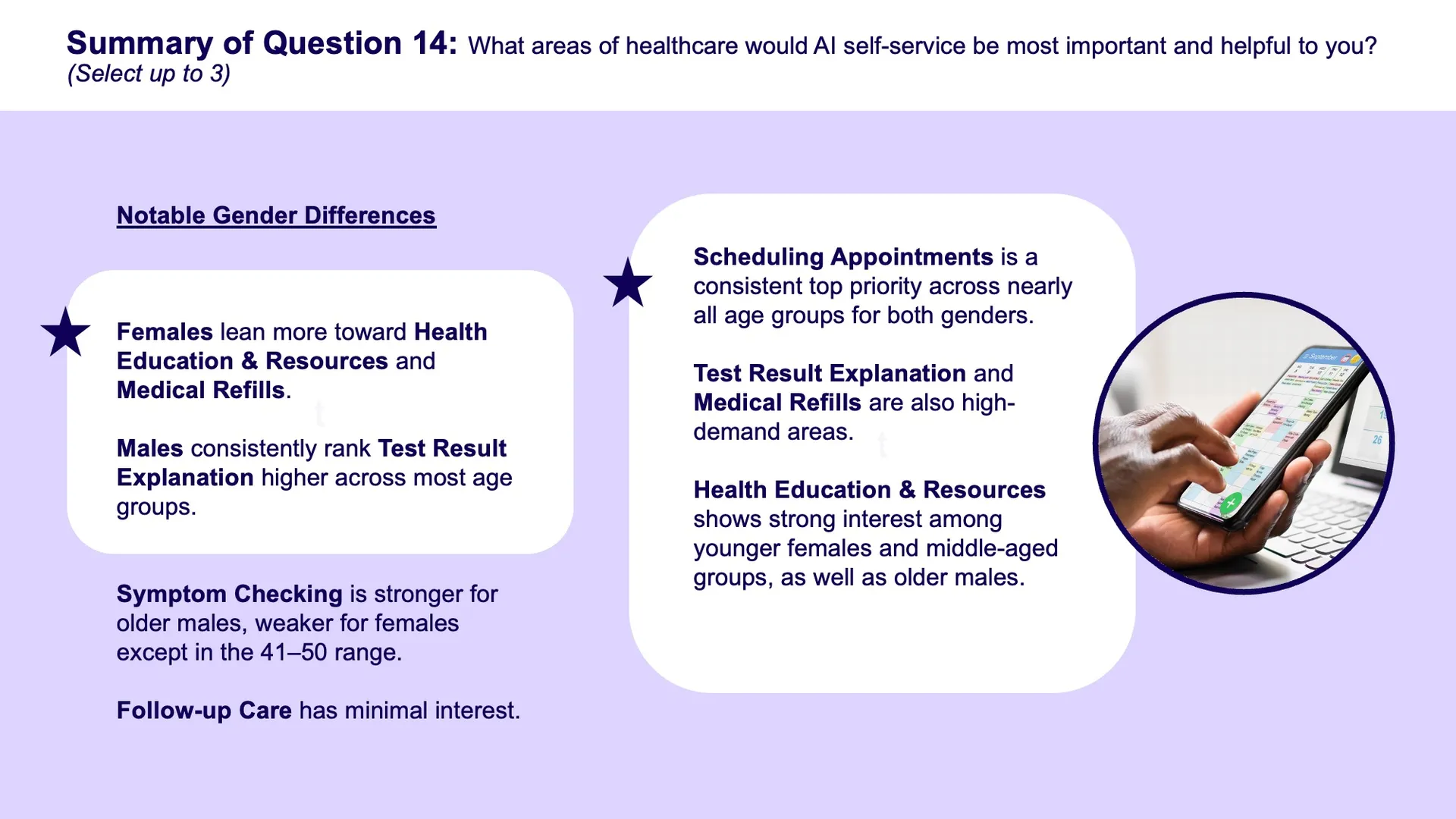

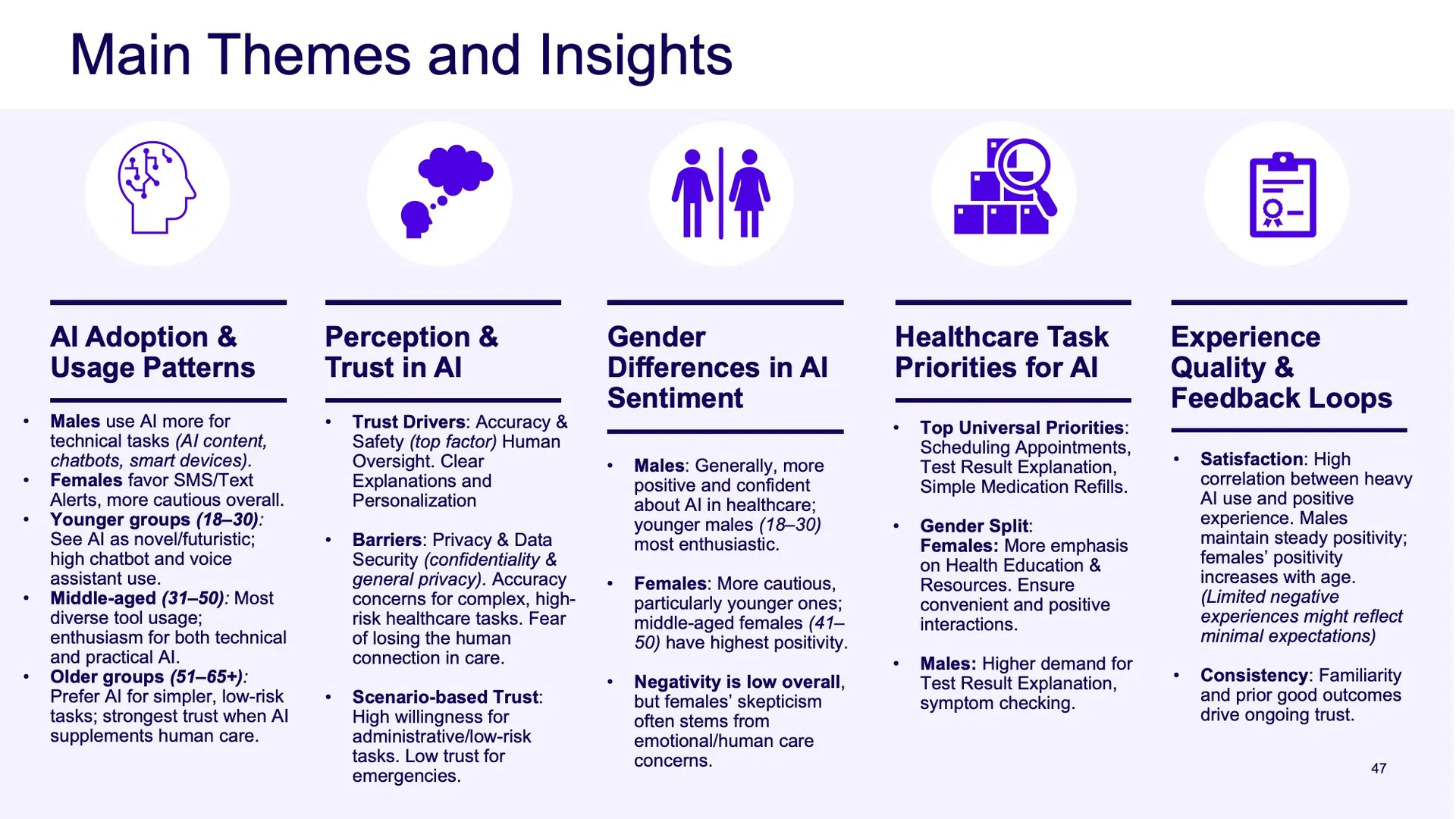

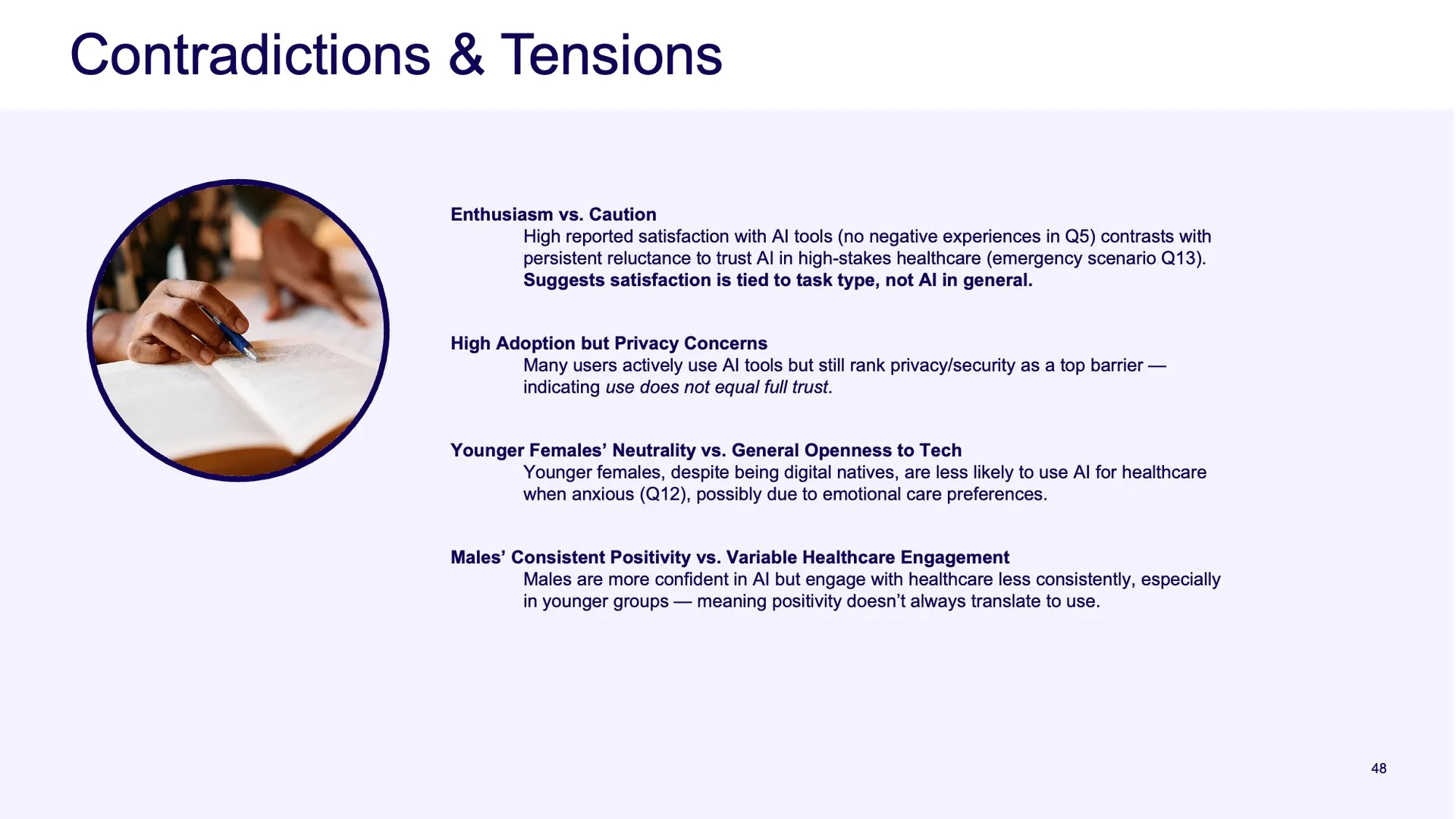

This project showcases a focused research effort on how patients perceive and trust AI within healthcare. Through structured testing and demographic analysis, I uncovered patterns that clarify where AI can responsibly enhance care. These insights now serve as strategic guidance for the digital teams, providing a clearer understanding of user expectations, trust drivers, and adoption barriers. This project also demonstrates my ability to lead with rigor, translate research into actionable direction, and shape organizational thinking around emerging technologies.

Challenge

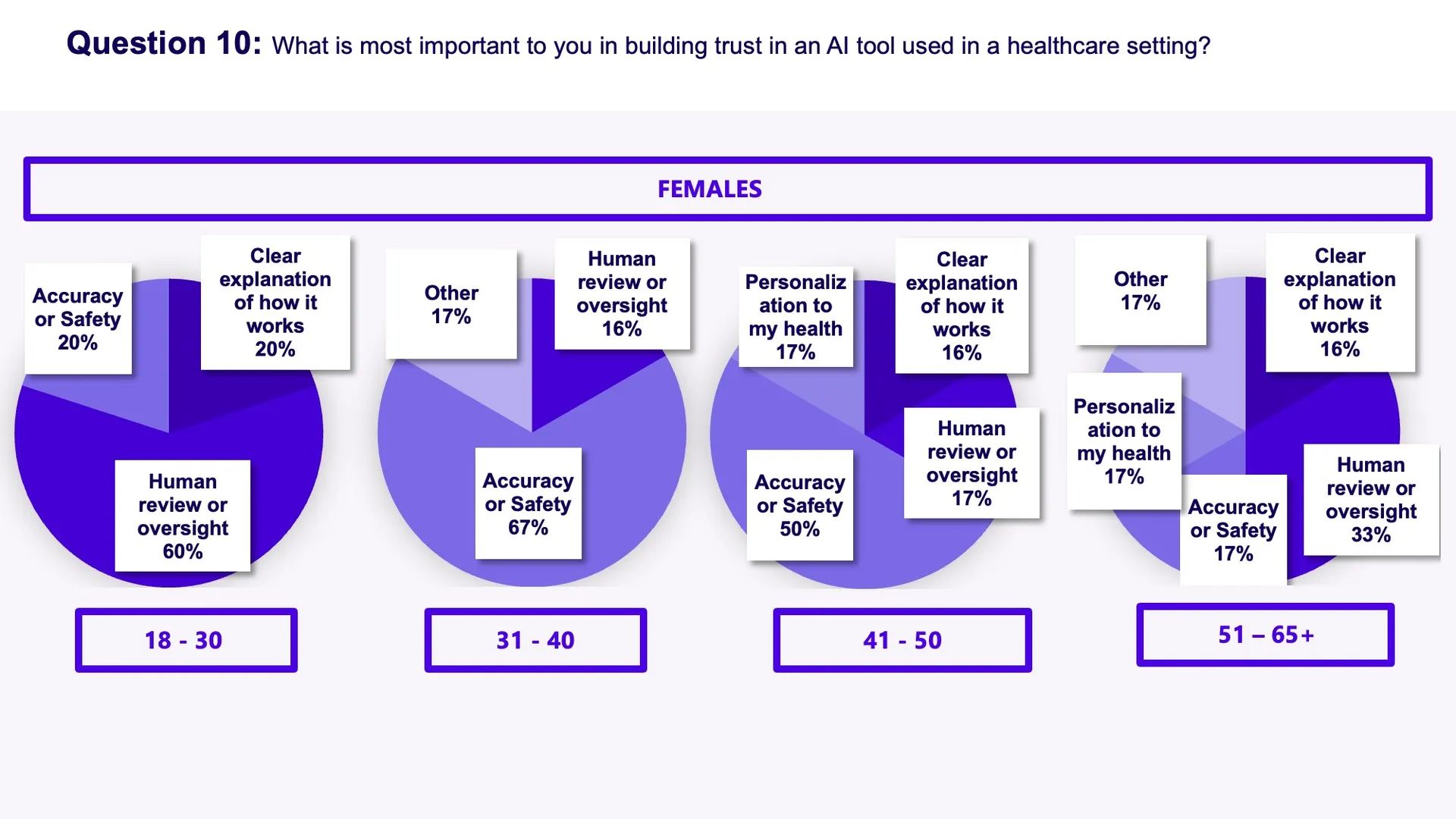

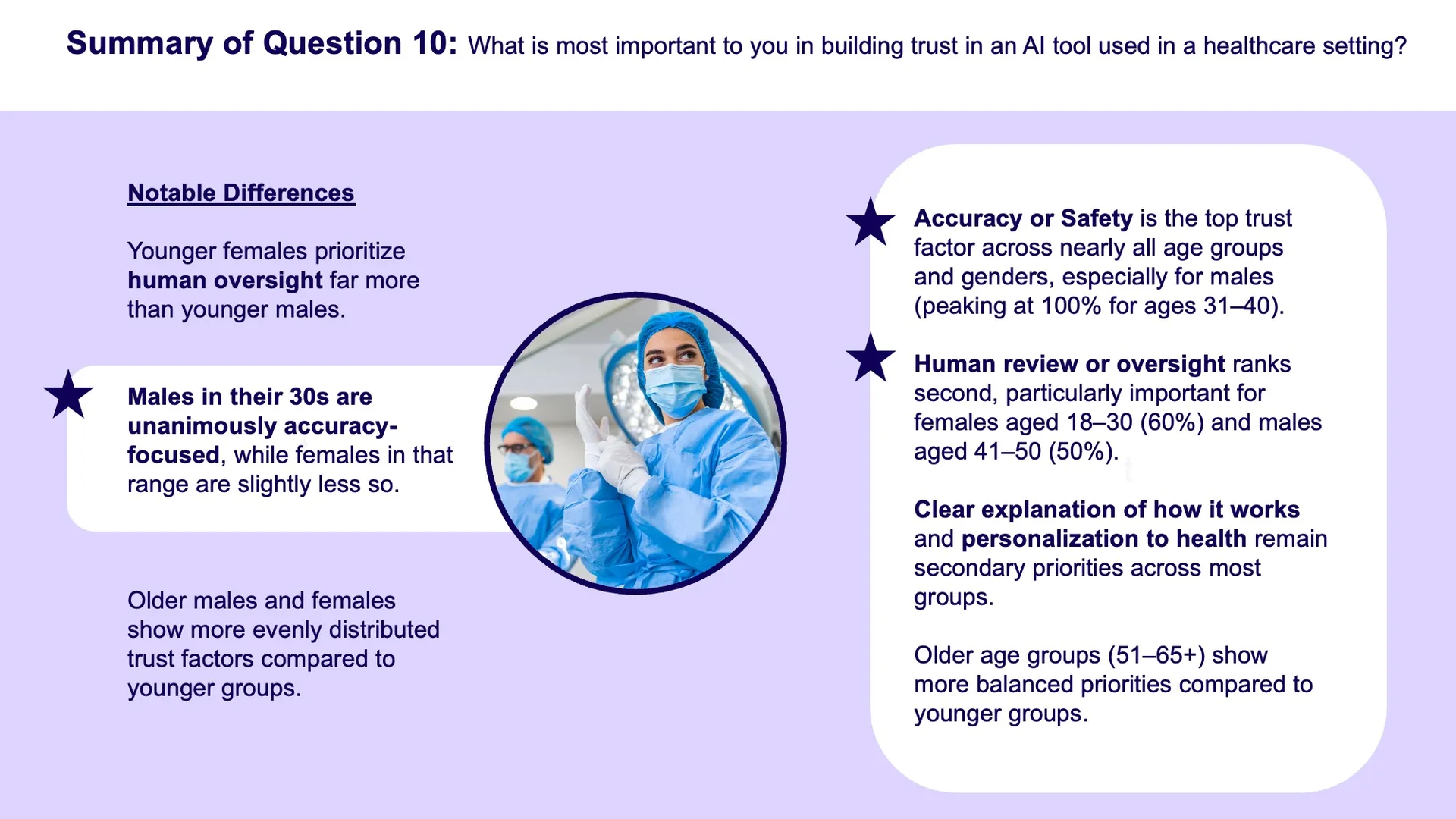

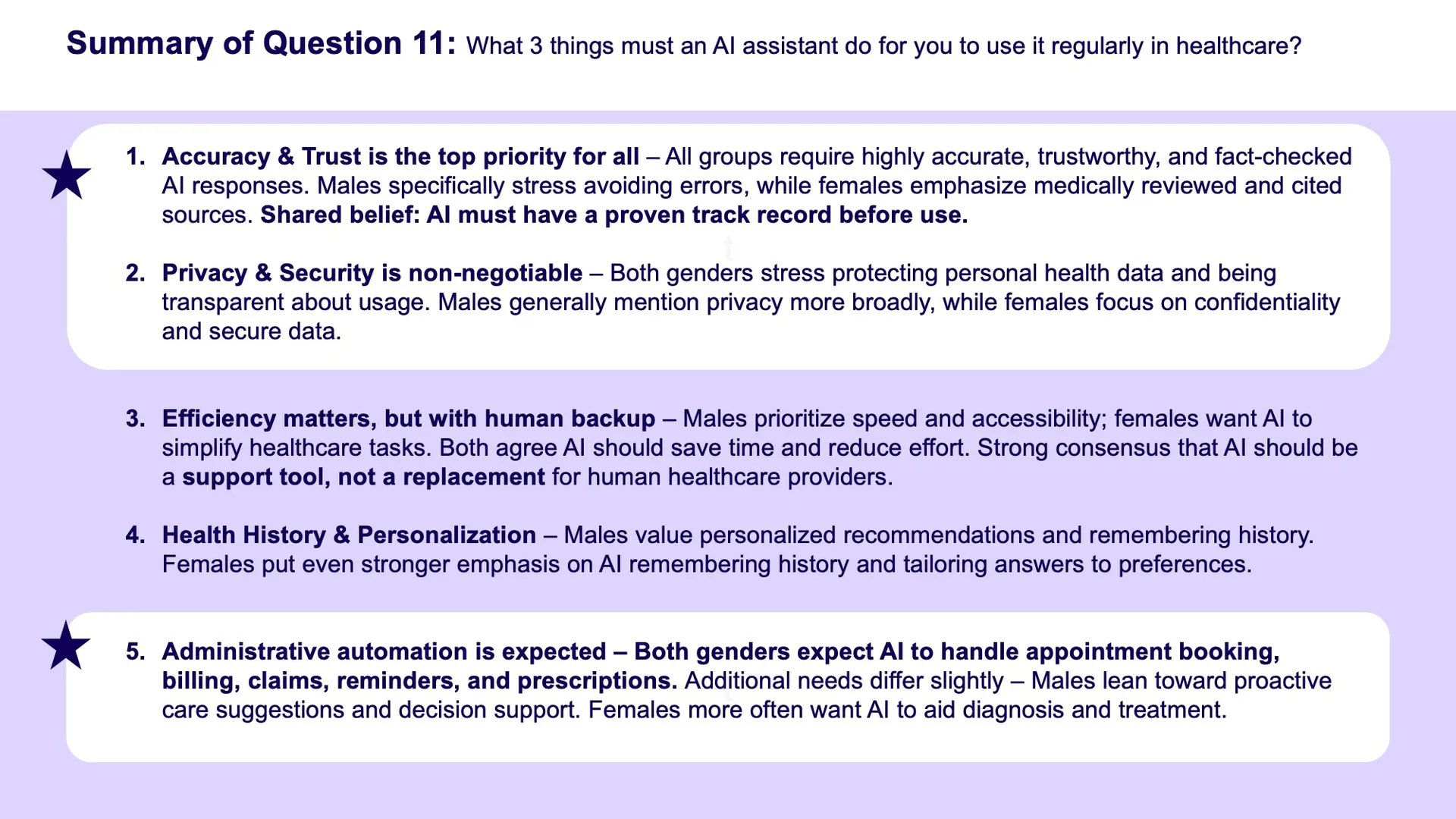

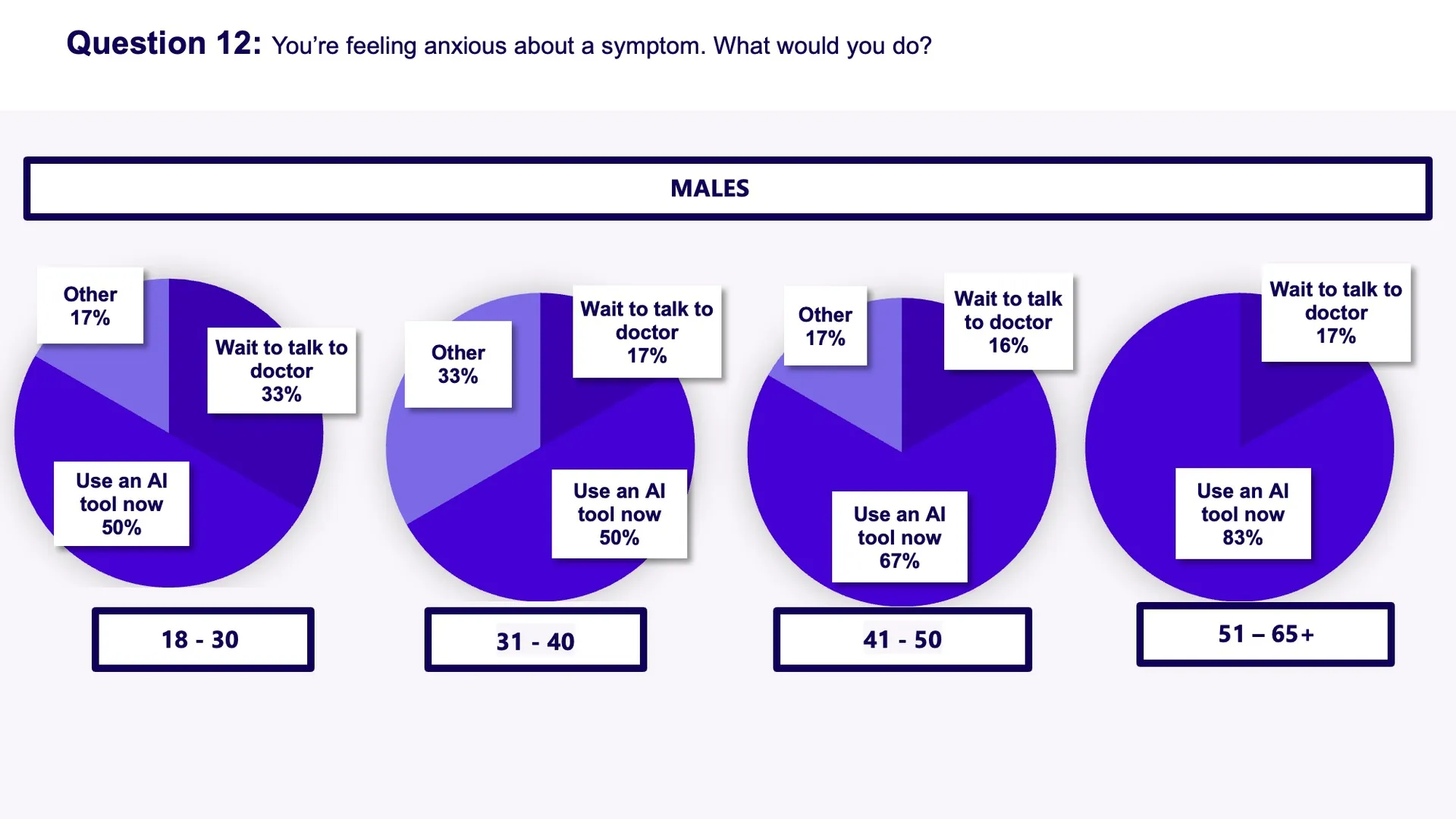

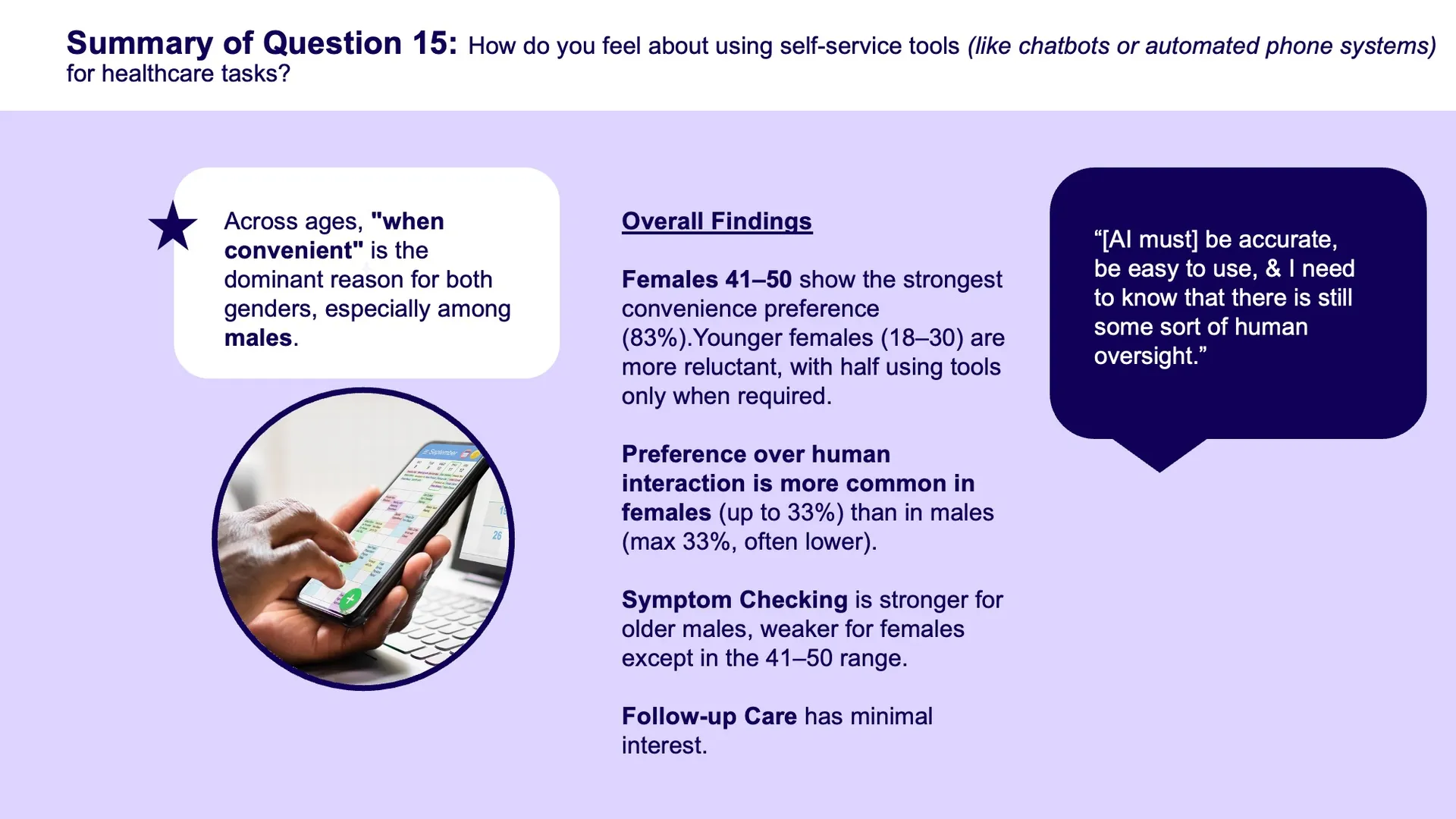

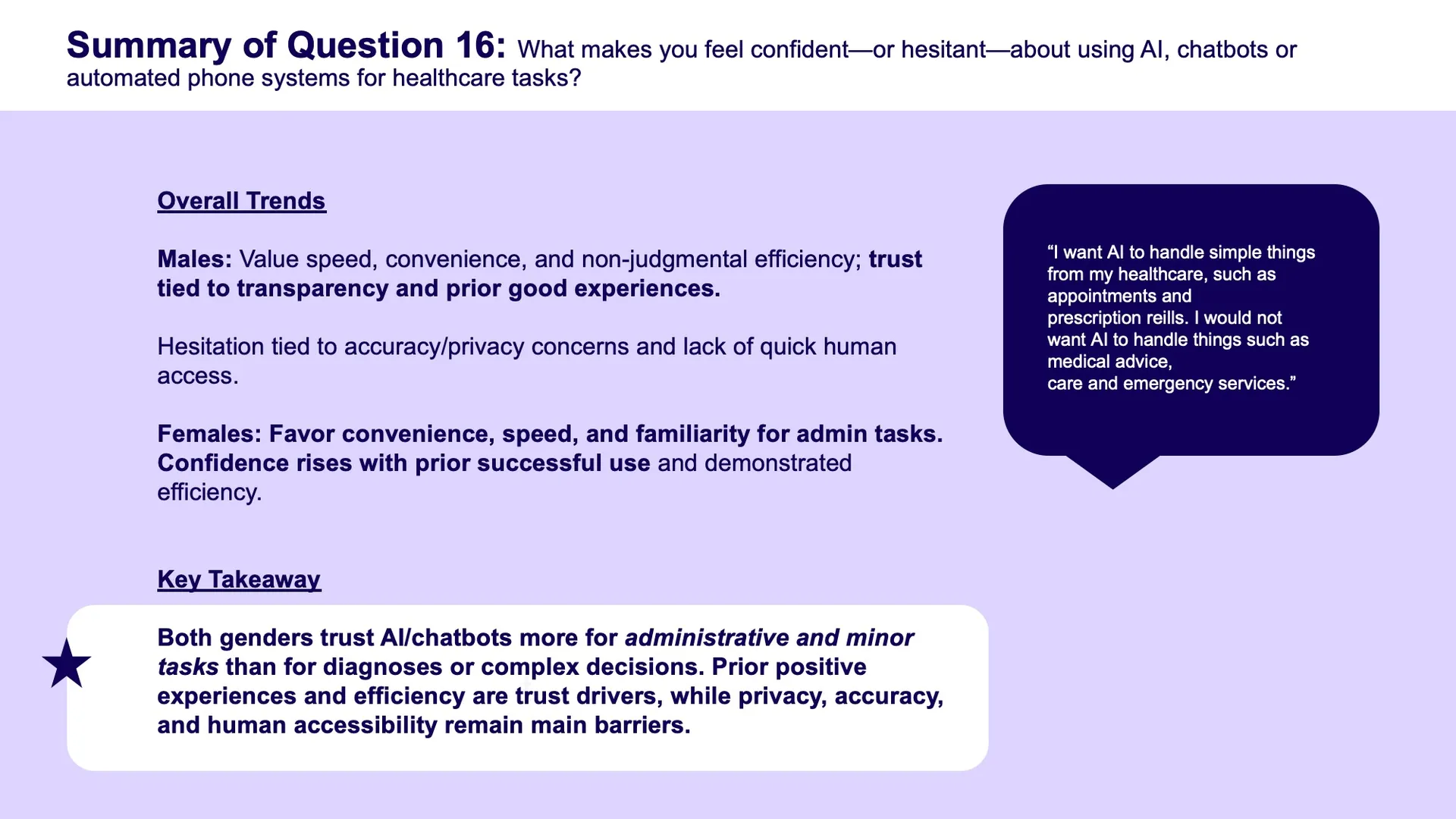

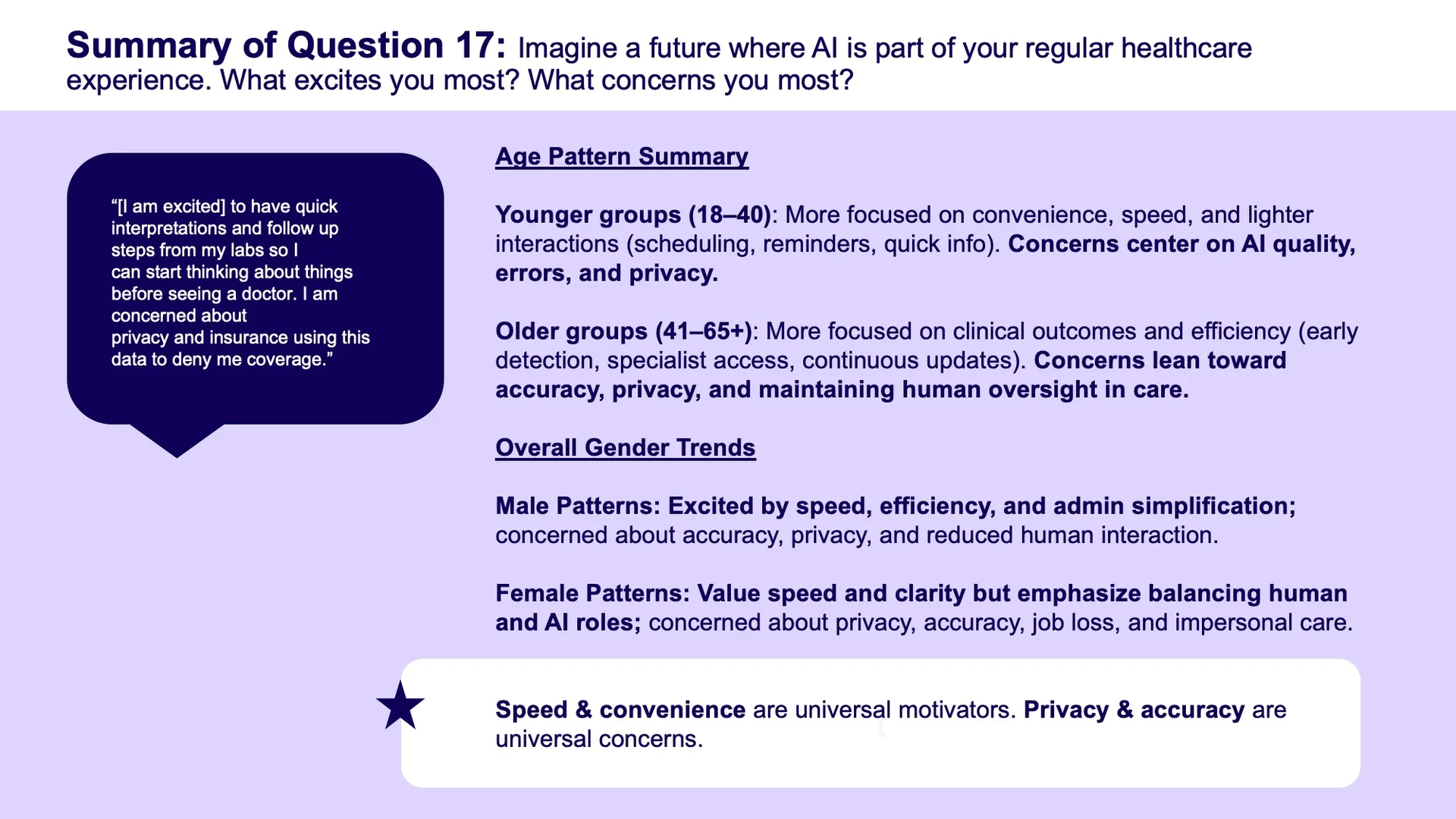

The team needed a deeper, evidence-based understanding of how patients perceive and trust AI in healthcare to ensure we designed responsible, user-centered AI experiences. We lacked clear insight into where AI could genuinely support care and where users felt uncertainty, especially around accuracy, privacy, safety, and the role of human oversight.

Team

Principal Designer: Lisa Lord, Junior BA: Sydney Donavan, Product Manager: Carl Wayman

Solution

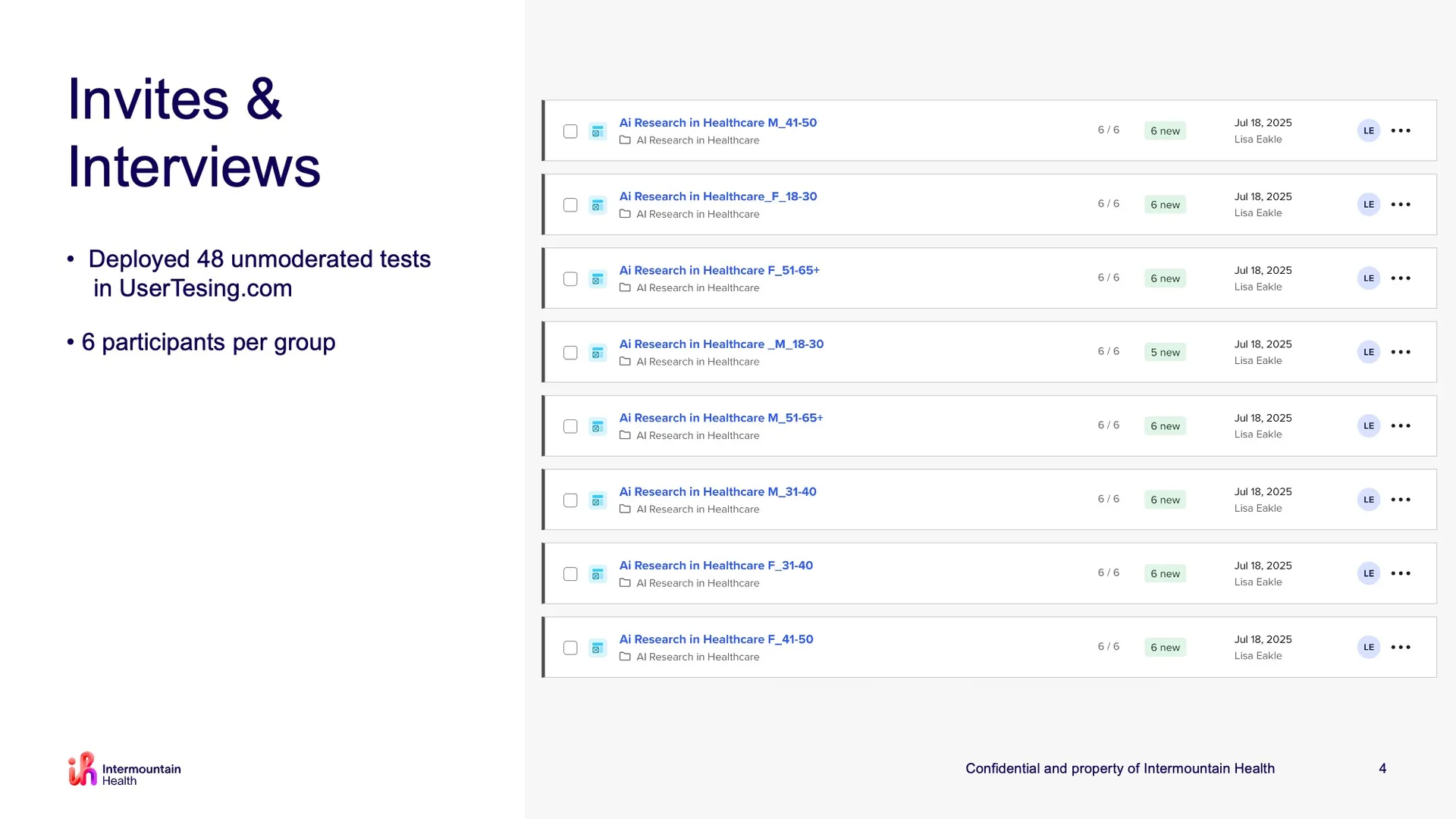

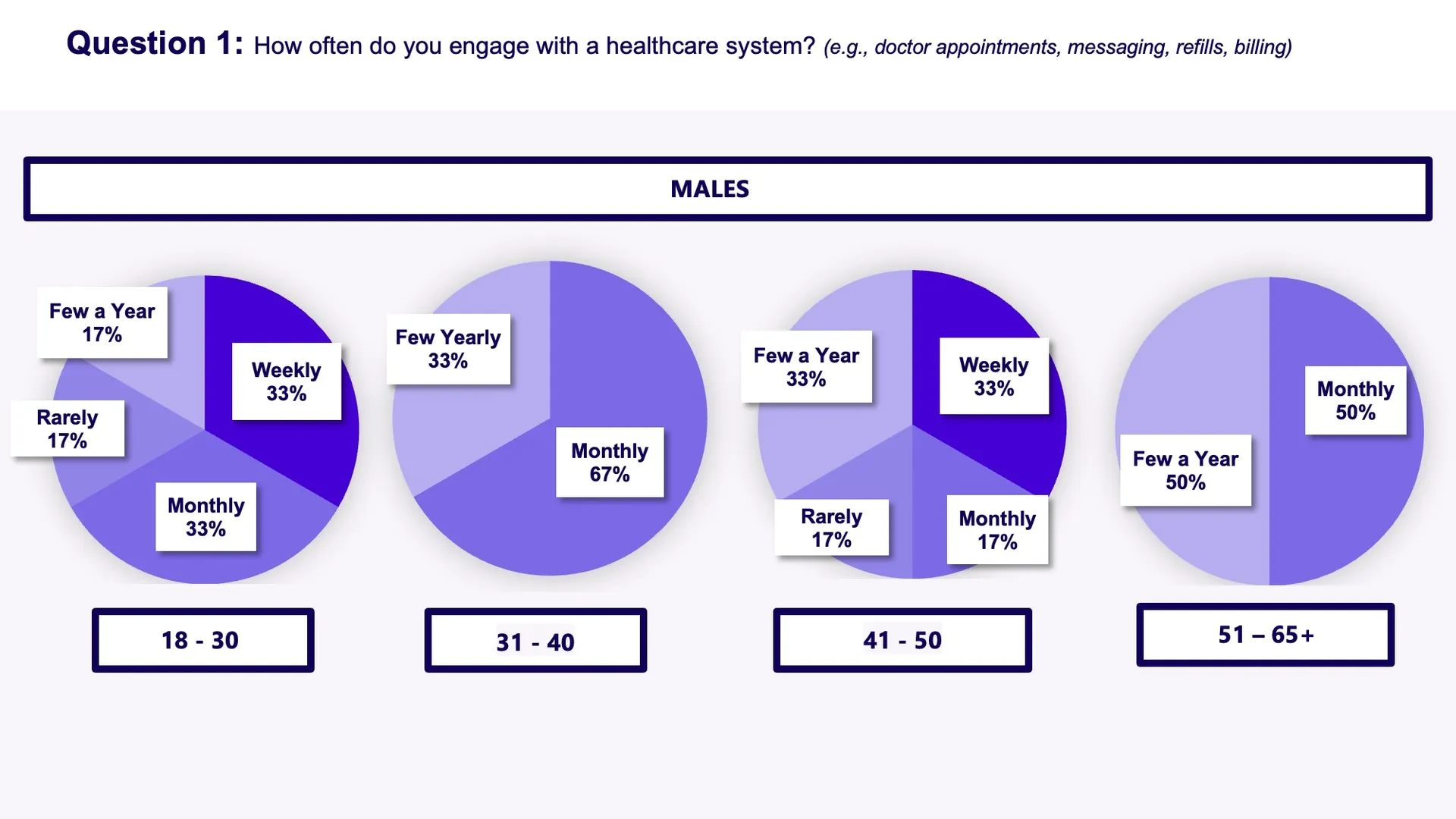

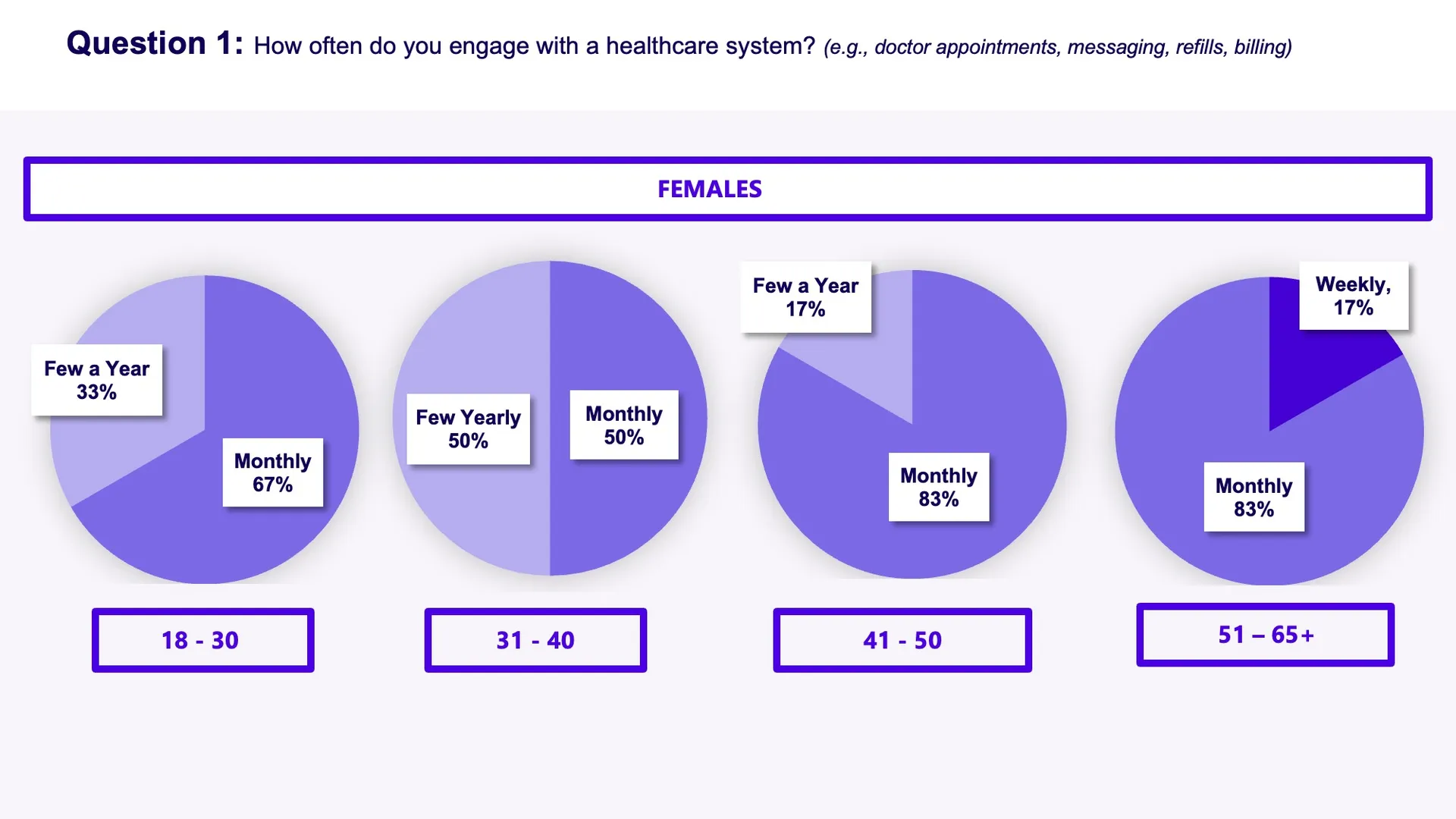

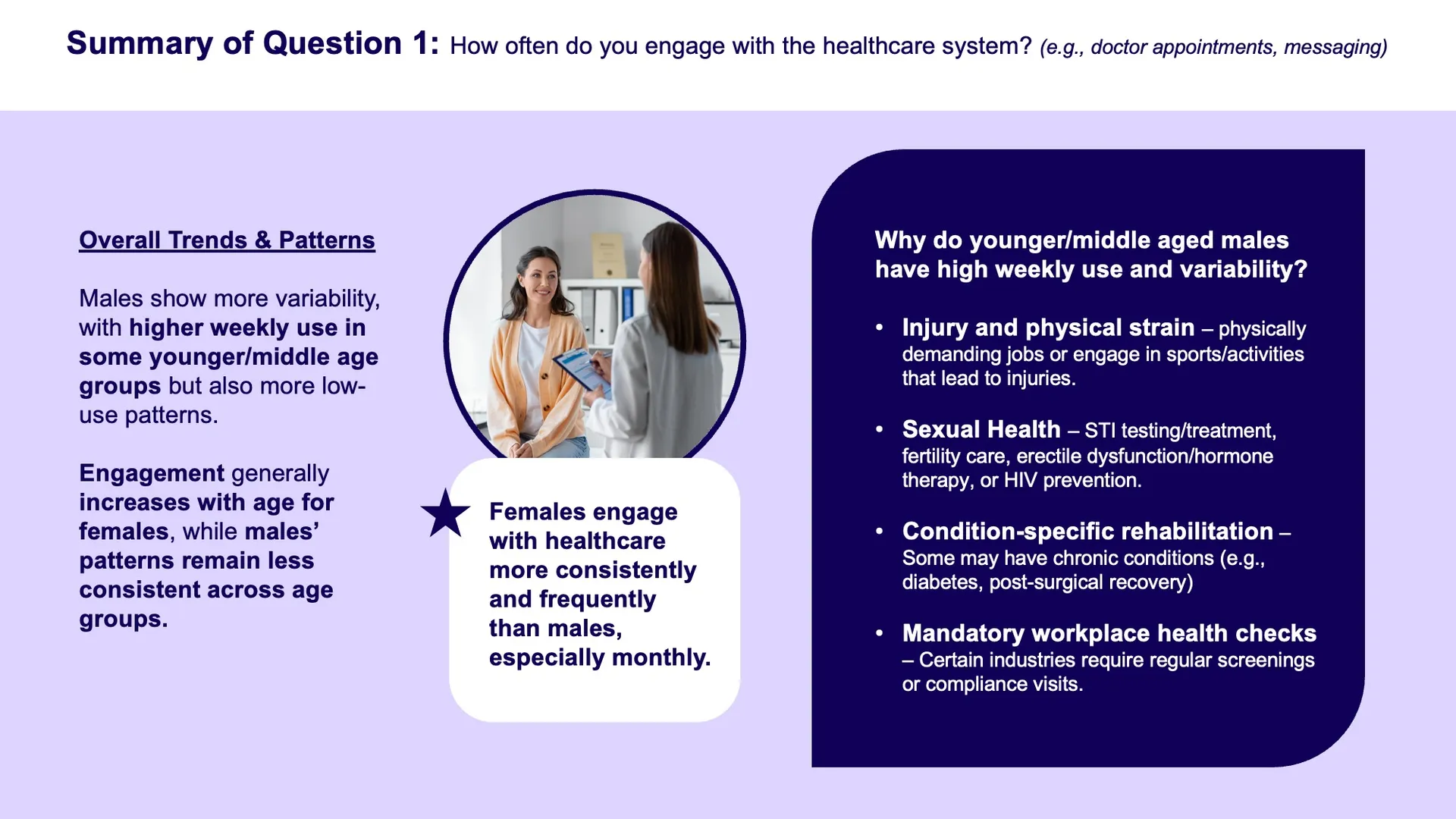

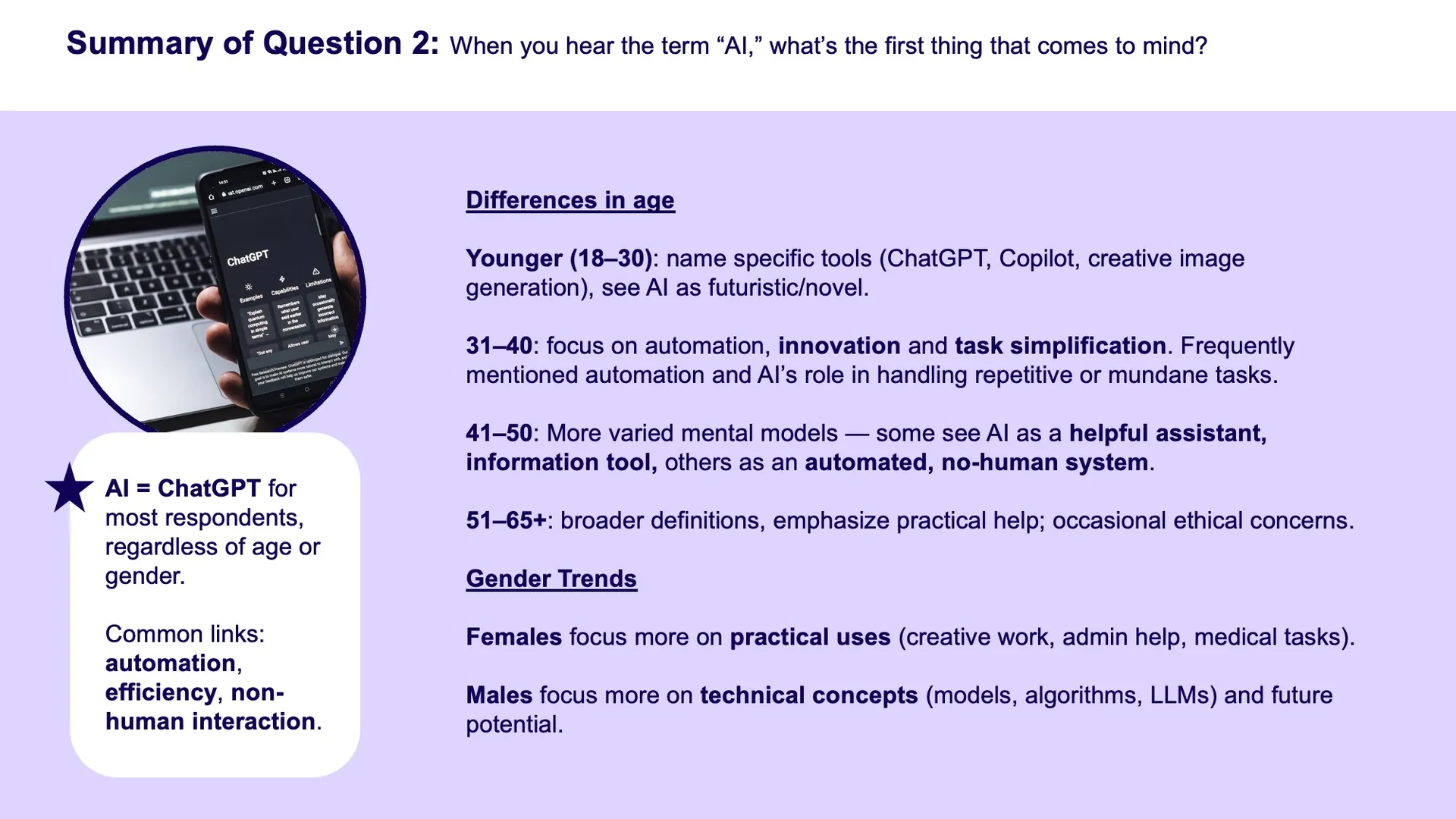

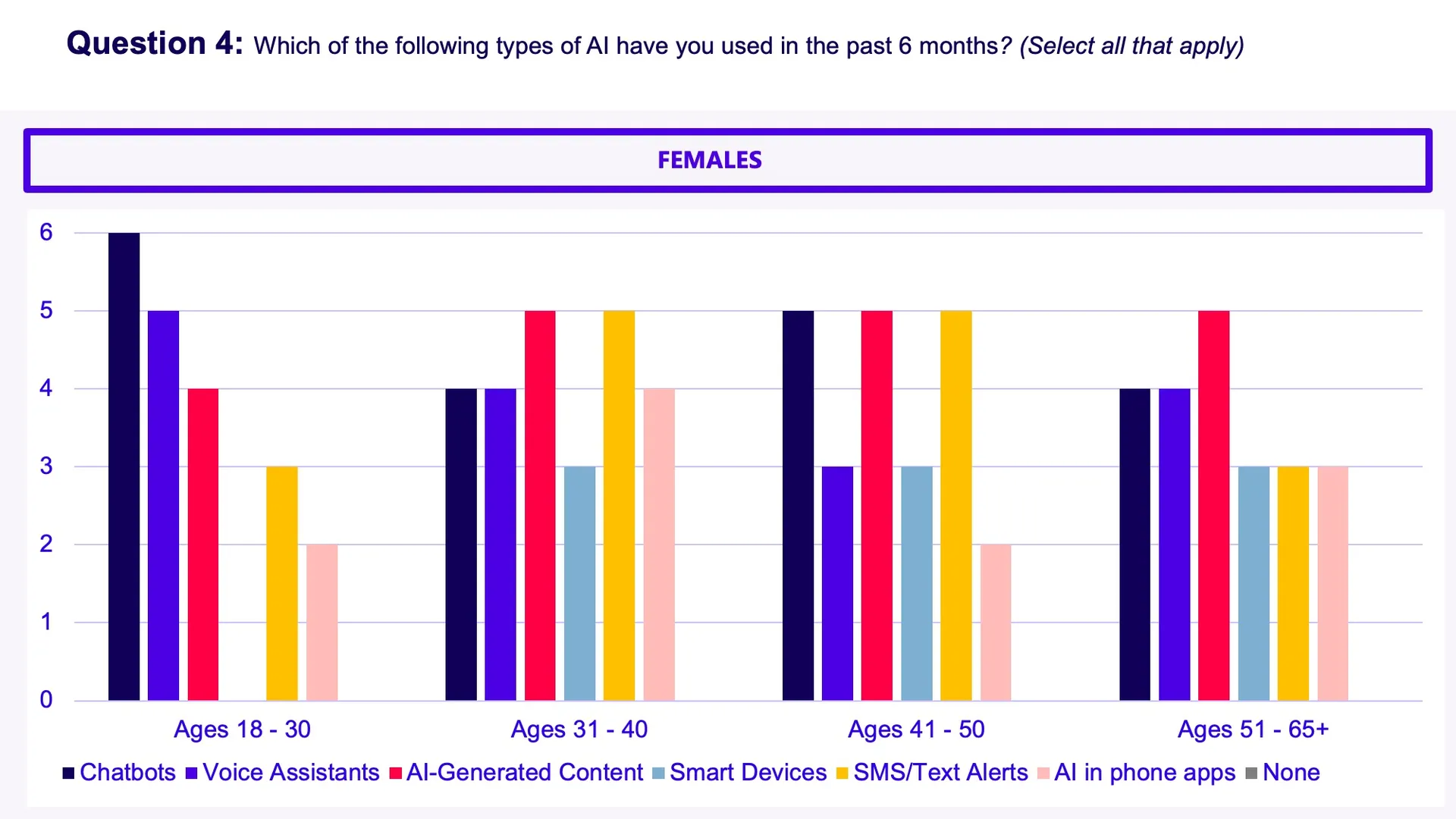

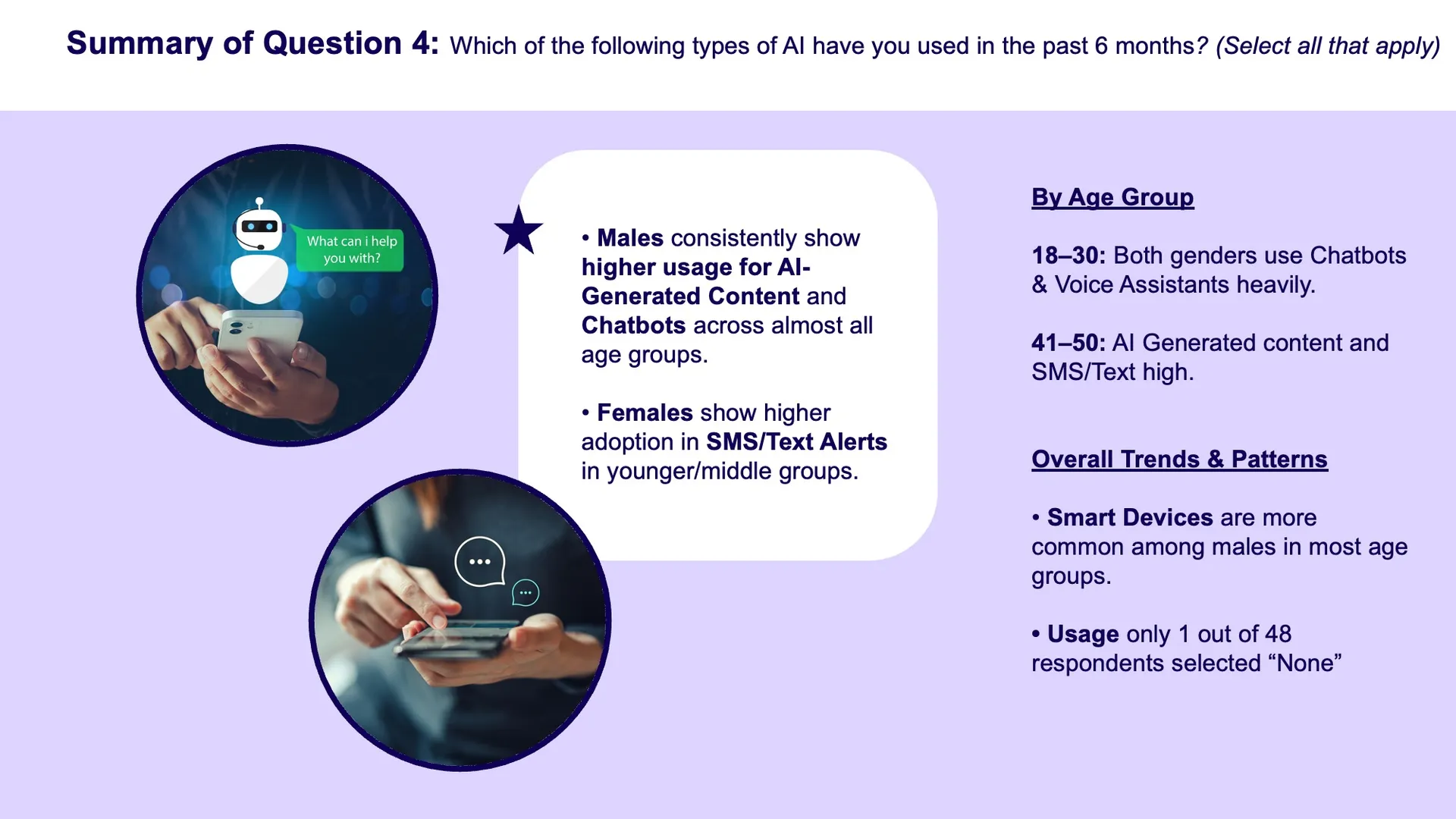

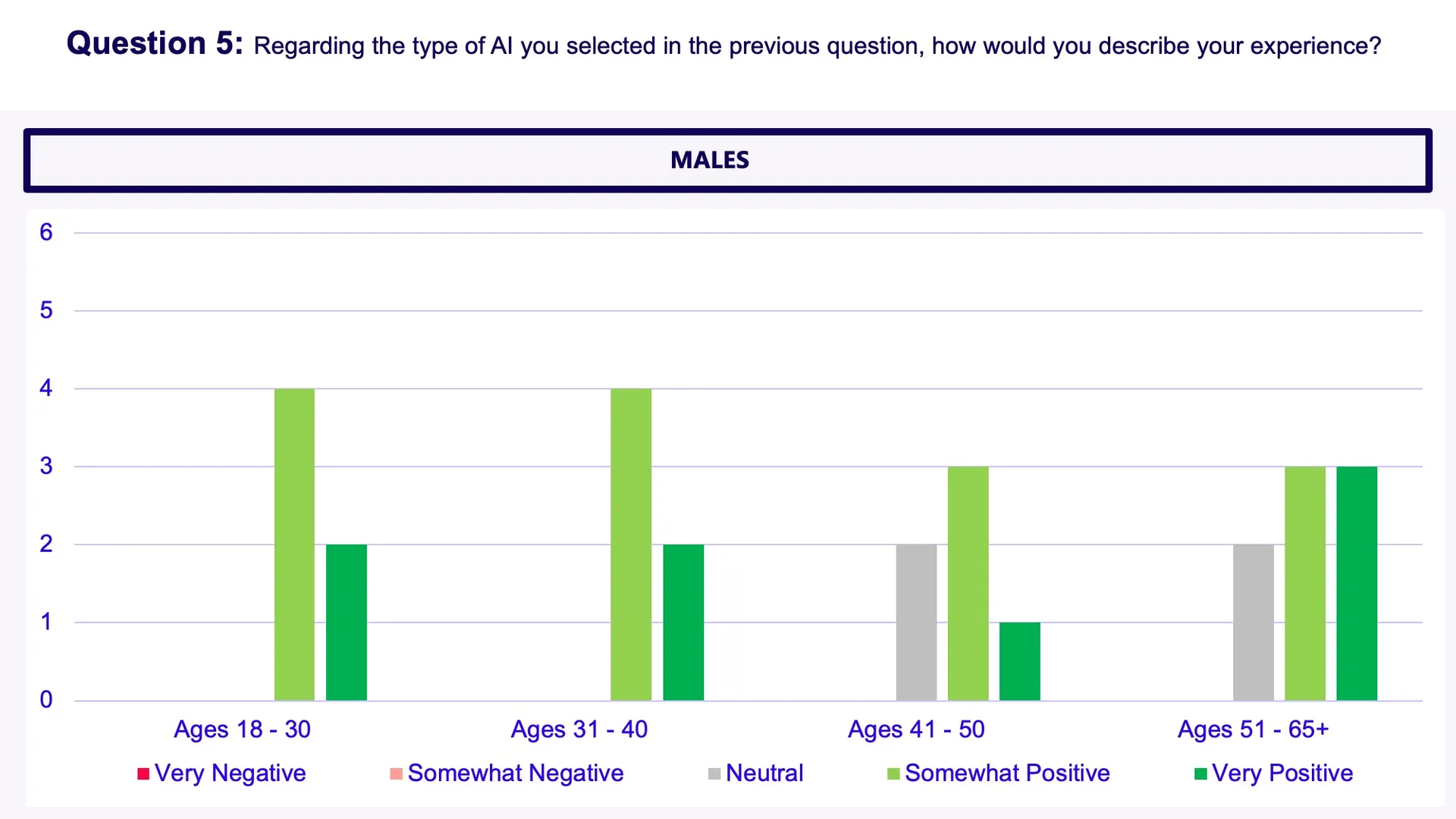

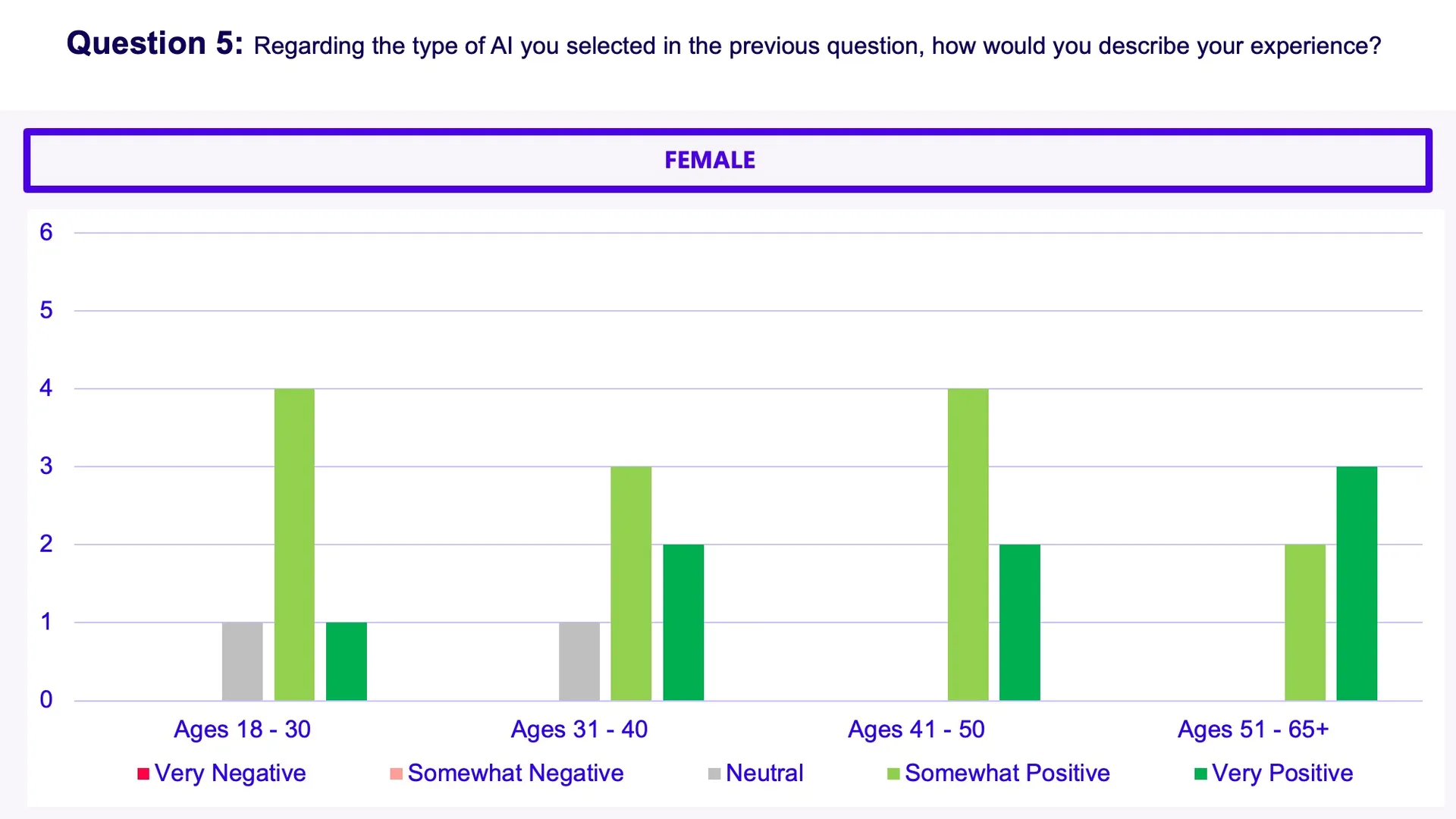

I led a structured research effort combining demographic analysis, unmoderated testing, and perception mapping to identify user expectations, trust drivers, and adoption barriers. I then created a comprehensive slide deck and accompanying training to share these findings with the larger digital team. This work now guides strategic decisions and aligns teams around designing safe, trustworthy, AI-enabled healthcare experiences.